5 approaches to building LLM agents (and when to use each one)

Michael Douglas

Sr. Product Marketing Manager

Explore five common approaches to building LLM-powered agents—wrappers, conversational platforms, automation tools, developer frameworks, and unified platforms—and learn when each is best for your business needs.

The enterprise race to deploy AI agents is on, but no one is sure where the starting line is. With the explosion of frameworks, platforms, and tooling in the AI space, the number of product options and deployment pathways can be overwhelming. The right approach often depends on your end goal, whether you’re automating a detailed document analysis workflow, building an internal coding assistant, or designing a customer-facing support agent.

Should you use a low-code bot builder? A developer-first agent framework? Build your own logic on top of GPT-4 or Claude? Or go all-in with a unified platform?

There’s no universal “best” option. It depends on your business requirements, the complexity of your use case, the skills of your team, and your existing tech stack.

Here’s a breakdown of five common approaches to building LLM-powered agents, when they work best, and what to watch out for.

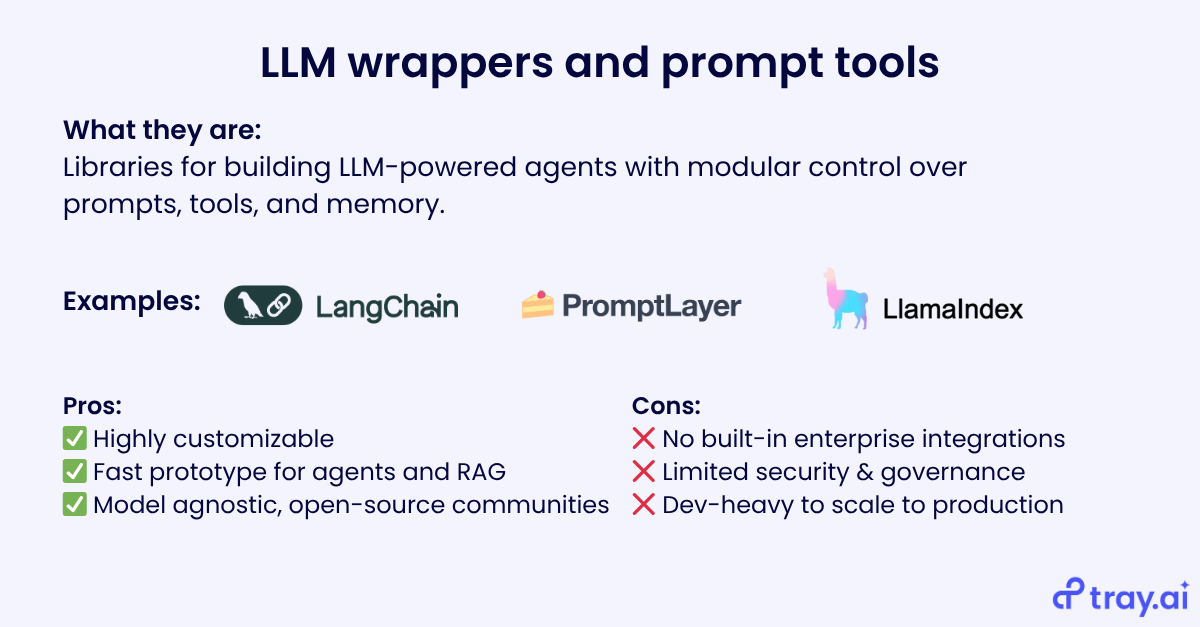

1. LLM wrappers and prompt tools

Best for: Developers who want fast, flexible prototypes for experimentation (typically not for production use)

LLM wrappers like LangChain, LlamaIndex, and PromptLayer are libraries designed to help developers build LLM-powered applications more efficiently. Instead of calling the raw API for GPT-4 or Claude every time, wrappers let you define prompts, chain model calls, add memory, and integrate tools, all as modular components.

These tools are typically model-agnostic, meaning you can switch between providers like OpenAI, Anthropic, Google, or open-source models by updating credentials or endpoints.
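To make the wrapper idea concrete, here is a minimal Python sketch of the pattern. It is not the API of LangChain or any other library. It assumes a hypothetical `ChatModel` interface with a single `complete()` method, and `FakeModel` stands in for a hosted provider so the example runs offline. Each `PromptStep` is a reusable prompt template, `run_chain` pipes one step's output into the next, and the provider is chosen from a configuration table.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class ChatModel(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class FakeModel:
    """Offline stand-in for a hosted provider (OpenAI, Anthropic, etc.)."""

    name: str

    def complete(self, prompt: str) -> str:
        # A real backend would call the provider's API with credentials here.
        return f"[{self.name}] {prompt}"


@dataclass
class PromptStep:
    """One modular step: a prompt template filled with the previous output."""

    template: str

    def run(self, model: ChatModel, text: str) -> str:
        return model.complete(self.template.format(input=text))


def run_chain(model: ChatModel, steps: list[PromptStep], text: str) -> str:
    """Chain model calls: each step's output becomes the next step's input."""
    for step in steps:
        text = step.run(model, text)
    return text


# Provider selection lives in configuration, not in the chain logic.
MODELS: dict[str, Callable[[], ChatModel]] = {
    "provider_a": lambda: FakeModel("provider_a"),
    "provider_b": lambda: FakeModel("provider_b"),
}

steps = [
    PromptStep("Summarize: {input}"),
    PromptStep("Classify the sentiment of: {input}"),
]

result = run_chain(MODELS["provider_b"](), steps, "Q3 support tickets")
```

Because the chain depends only on the `complete()` interface, switching from `"provider_b"` to `"provider_a"` changes no pipeline code. Retries, authentication, and logging are still left for you to add, which is the production gap described in the cons below.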

Pros

High flexibility and customization

Fast prototyping for agents, RAG, and tool use

Active open-source communities

Cons

No built-in integration with enterprise systems

Little support for governance, security, or reliability

Requires significant development effort to scale (which can quickly increase costs)

LLM wrappers are useful if you’re exploring agent behavior or need to experiment across multiple models. But in production settings, especially those involving enterprise data and systems, they often need to be paired with additional infrastructure for integration, orchestration, and compliance.

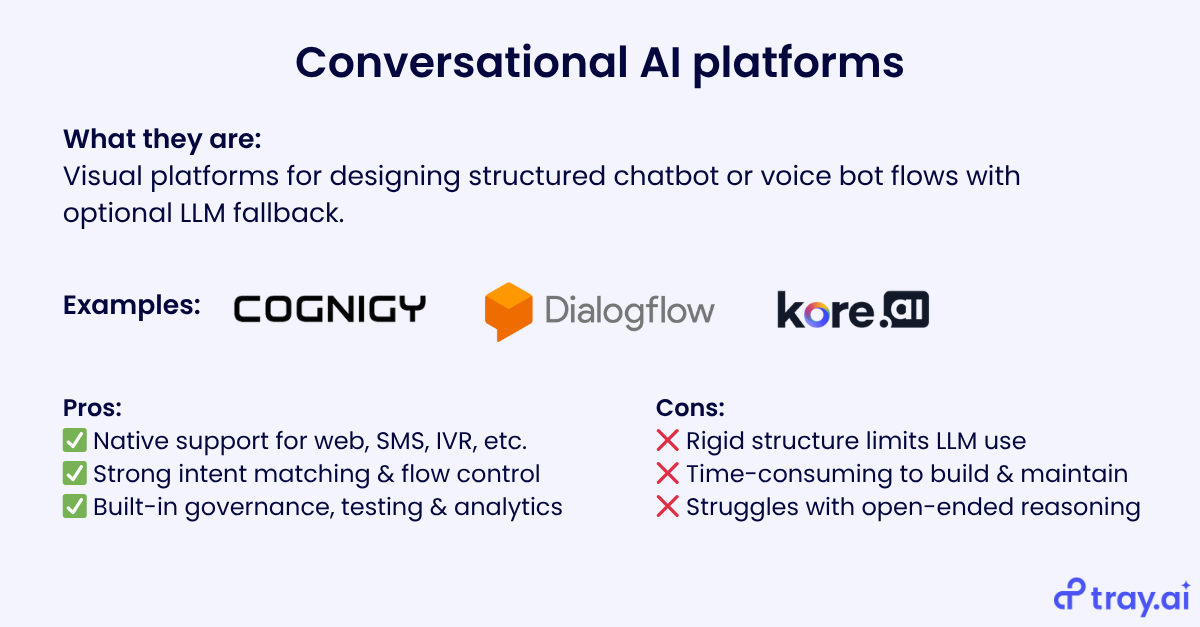

2. Conversational AI platforms

Best for: Structured support flows where consistency, routing, and channel coverage are key

Conversational AI platforms like Dialogflow CX, Kore.ai, and Cognigy are built for managing structured interactions, usually in the form of chatbots or voice bots. They give you a visual interface to define intents, handle user input, and guide conversations step by step through predefined flows.

Many of these platforms have added support for LLMs like GPT-4 or PaLM. The typical pattern is fallback generation: if a user asks something that doesn’t match a predefined intent, the system calls the LLM to generate a response and then hands the conversation back to the flow. Some platforms also let you drop LLM steps into the dialog itself (for example, to summarize input or rephrase a question), but the LLM is rarely central to the architecture.
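A rough sketch of fallback generation follows, assuming a toy keyword matcher in place of a real intent model. `INTENTS`, `match_intent`, and `Flow` are hypothetical names, and the `llm` argument stands in for whatever model call a given platform makes. Scripted intents always take priority; only unmatched input reaches the LLM, after which the conversation returns to the main menu.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical intent table: trigger keywords -> scripted reply.
INTENTS: dict[str, tuple[set[str], str]] = {
    "reset_password": ({"password", "reset"}, "I can help reset your password. What is your username?"),
    "order_status": ({"order", "tracking"}, "Please share your order number."),
}


def match_intent(utterance: str) -> Optional[str]:
    """Toy intent matcher: first intent sharing a keyword with the input."""
    words = set(utterance.lower().split())
    for intent, (keywords, _reply) in INTENTS.items():
        if words & keywords:
            return intent
    return None


@dataclass
class Flow:
    llm: Callable[[str], str]  # stand-in for the platform's LLM call
    state: str = "main_menu"
    fallbacks: list[str] = field(default_factory=list)

    def handle(self, utterance: str) -> str:
        intent = match_intent(utterance)
        if intent is not None:
            # The predefined flow takes priority over generation.
            self.state = intent
            return INTENTS[intent][1]
        # Fallback generation: the LLM answers once, then control
        # returns to the scripted flow at the main menu.
        self.fallbacks.append(utterance)
        self.state = "main_menu"
        return self.llm(utterance) + " Anything else I can help with?"


flow = Flow(llm=lambda text: "Our support line opens at 9am.")
first = flow.handle("I need to reset my password")
second = flow.handle("when does support open")
```

Keeping the LLM on the fallback path is what gives these platforms their predictability, and also why the model rarely drives the conversation.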

These tools are designed to deploy across multiple channels: web, SMS, WhatsApp, Slack, IVR, and more. They often include built-in connectors for these channels, as well as analytics and testing tools for performance tracking and versioning.

Pros

Built-in support for multichannel deployment

Reliable intent matching and flow management

Governance and monitoring out of the box

Cons

Rigid structure limits LLM flexibility

Setup and maintenance can be labor-intensive

Not ideal for novel or open-ended use cases

These platforms work well when your use case is narrow, repeatable, and needs to run across customer-facing channels. But if you’re trying to build an agent that can reason, adapt, and take meaningful action across systems, you’ll likely run into limitations.

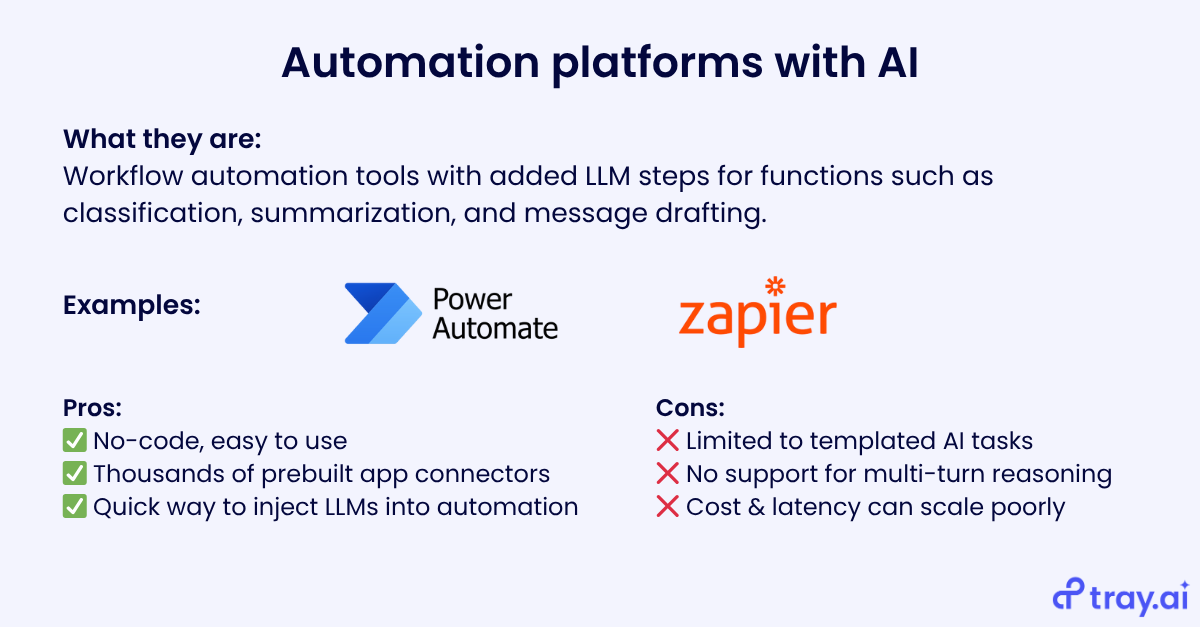

3. Automation platforms with AI enhancements

Best for: Business users who want to add simple AI tasks into structured workflows

Automation platforms like Zapier and Microsoft Power Automate are built to handle routine tasks: move data from one system to another, trigger follow-up actions, send alerts, and so on. They’re event-driven (e.g., “when X happens, do Y”) and typically rely on prebuilt connectors to thousands of SaaS apps.

Now, these platforms are adding LLM steps into the mix. You can insert an AI action to summarize a support ticket, classify an email, or draft a message. In some cases, you can even build full workflows using natural language prompts.

These AI features expand what these tools can do, but they’re still constrained. Most LLM steps are task-specific and based on templated prompts. They don’t support reasoning, memory, or decision-making loops, nor do they function as standalone agents.
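The templated, single-shot nature of these AI steps can be sketched as one trigger handler. This is a hypothetical Python illustration, not Zapier or Power Automate configuration: `on_new_email`, `CLASSIFY_TEMPLATE`, and the queue names are invented for the example, and `llm` is any function that sends a prompt to a model and returns text. The model is called exactly once with a fixed template, and its output only selects a route.

```python
from dataclasses import dataclass
from typing import Callable

# A fixed, templated prompt: the model fills in one label and nothing more.
CLASSIFY_TEMPLATE = (
    "Classify this email as one of: billing, support, sales.\n"
    "Reply with the label only.\n\n"
    "Email: {body}"
)

# Hypothetical destination queues for each label.
ROUTES = {"billing": "finance-queue", "support": "helpdesk-queue", "sales": "crm-queue"}


@dataclass
class Email:
    sender: str
    body: str


def on_new_email(email: Email, llm: Callable[[str], str]) -> str:
    """Trigger handler: when an email arrives, classify it once, then route it."""
    label = llm(CLASSIFY_TEMPLATE.format(body=email.body)).strip().lower()
    # No retries, memory, or follow-up reasoning: anything unexpected
    # goes to a default queue for a human to handle.
    return ROUTES.get(label, "triage-queue")


def fake_llm(prompt: str) -> str:
    # Stand-in for the platform's AI action.
    return "Billing\n" if "invoice" in prompt else "not sure"
```

The structure is "when X happens, do Y" with one model call in the middle; there is no loop in which the model could inspect a result and decide on a next step.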

Pros

Easy to use, no coding required

Prebuilt connectors to thousands of apps

Fast way to add LLMs to existing automations

Cons

Limited AI flexibility

Not built for multi-turn logic or open-ended reasoning

LLM usage costs and latency can add up at scale

If your goal is to automate basic document handling or decision triage, like routing emails based on tone or tagging inbound requests, this approach works well. But it’s not designed for building agents that can reason, act, and adapt across multiple systems in real time.

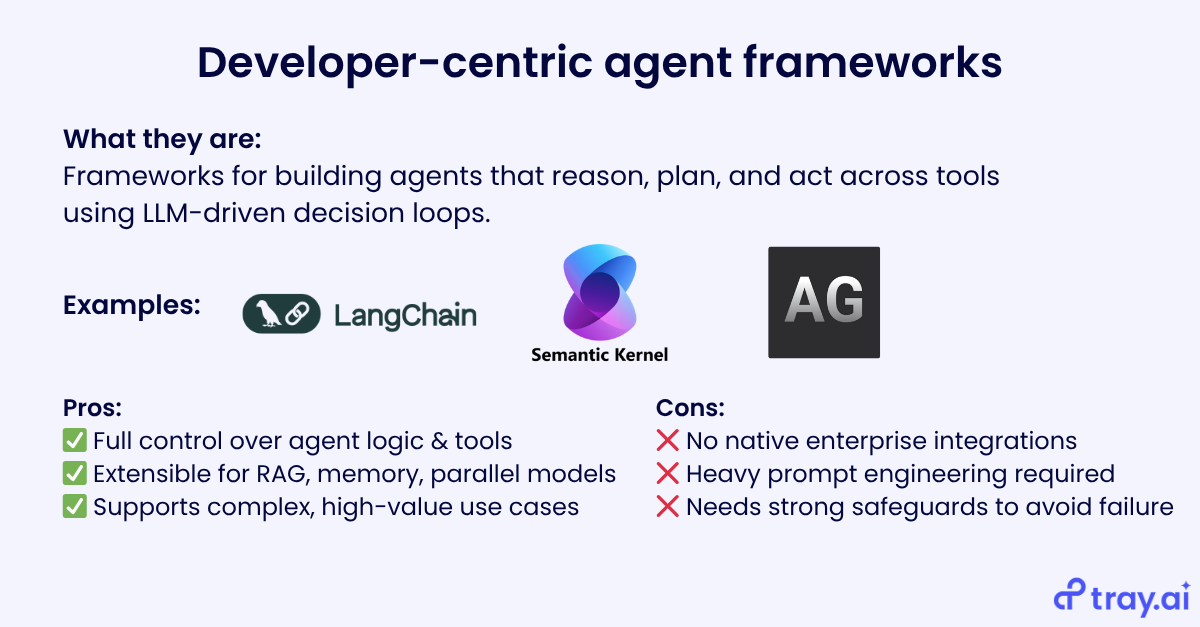

4. Developer-centric agent frameworks

Best for: Technical teams building agents that reason, decide, and act across tools and systems

Frameworks like LangChain, Semantic Kernel, and AutoGen are designed for building autonomous agents: systems that use LLMs not just to generate text, but to plan, decide, and execute multi-step tasks. These frameworks provide abstractions for the ReAct (reasoning + acting) loop, allowing the model to choose a tool, perform an action, observe the result, and decide what to do next.

You define the tools (e.g., APIs, functions, databases) and provide prompts that teach the LLM how to use them. The framework handles the orchestration: passing outputs back into the model, managing iterations, and sometimes enforcing stopping conditions or safeguards.
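The ReAct loop described in the two paragraphs above can be sketched in plain Python. This is a simplified illustration, not the internals of LangChain, Semantic Kernel, or AutoGen: `Action`, `Finish`, and `react_loop` are hypothetical names, and `decide` stands in for an LLM call whose output a real framework would parse from text or a function-calling response. The loop feeds each observation back to the model, reports tool errors instead of crashing, and stops after `max_steps` as a guard against runaway loops.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Action:
    tool: str
    tool_input: str


@dataclass
class Finish:
    answer: str


Decision = Union[Action, Finish]


def react_loop(
    decide: Callable[[list[str]], Decision],
    tools: dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> str:
    """Reason -> act -> observe, until the model finishes or the budget runs out."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        decision = decide(transcript)  # the model reasons over everything so far
        if isinstance(decision, Finish):
            return decision.answer
        tool = tools.get(decision.tool)
        if tool is None:
            observation = f"Error: unknown tool {decision.tool!r}"
        else:
            try:
                observation = tool(decision.tool_input)
            except Exception as exc:  # report tool failures back to the model
                observation = f"Error: {exc}"
        transcript.append(f"Action: {decision.tool}({decision.tool_input})")
        transcript.append(f"Observation: {observation}")
    return "Stopped: step limit reached"  # safeguard against endless loops


def scripted_model(transcript: list[str]) -> Decision:
    # Stand-in for an LLM: look up the order first, then answer from the observation.
    if not any(line.startswith("Observation:") for line in transcript):
        return Action("lookup_order", "A123")
    return Finish(transcript[-1].removeprefix("Observation: "))


tools = {"lookup_order": lambda order_id: f"Order {order_id} has shipped"}
answer = react_loop(scripted_model, tools, "Where is order A123?")
```

Most of the engineering effort in real frameworks goes into the parts this sketch glosses over: turning free-form model output into a reliable `Action`, and deciding what a sensible stopping condition is.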

They’re model-agnostic and often support both closed APIs (like OpenAI or Anthropic) and open-source models via libraries such as Hugging Face Transformers. Most frameworks are also extensible. You can bring your own retriever for RAG, define custom memory implementations, or run multiple models in parallel.
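Extensibility of this kind usually means coding against an interface. Below is a minimal sketch assuming a hypothetical `Retriever` protocol: any object with a `retrieve(query, k)` method can be passed to `build_rag_prompt`, so the toy `KeywordRetriever` could be replaced by a vector-store retriever without touching the prompt-building code. None of these names come from a specific framework.

```python
from typing import Protocol


class Retriever(Protocol):
    """Hypothetical plug-in point: any object with this method works."""

    def retrieve(self, query: str, k: int) -> list[str]: ...


class KeywordRetriever:
    """Toy retriever that ranks documents by shared query words."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str, k: int) -> list[str]:
        terms = set(query.lower().split())
        ranked = sorted(
            self.docs,
            key=lambda doc: len(terms & set(doc.lower().split())),
            reverse=True,  # sorted() is stable, so ties keep their original order
        )
        return ranked[:k]


def build_rag_prompt(query: str, retriever: Retriever, k: int = 2) -> str:
    """Ground the prompt in retrieved context, whatever the retriever is."""
    context = "\n".join(f"- {doc}" for doc in retriever.retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Vacation requests need manager approval",
    "Expense reports are due monthly",
    "Vacation days reset every January",
]
prompt = build_rag_prompt("vacation approval", KeywordRetriever(docs))
```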

Pros

Maximum flexibility and control over agent behavior

Fast adoption of new techniques and prompting patterns

Ideal for complex, highly specific internal use cases

Cons

No built-in integration with enterprise systems

Requires extensive prompt engineering and testing

Prone to failure if tools return unexpected outputs or if the model gets stuck in loops

Significant cost to build and maintain the surrounding infrastructure

Developer frameworks are powerful but require experienced teams to use effectively. They’re best for pushing the limits of agent capabilities when you have the technical resources to support them long term.

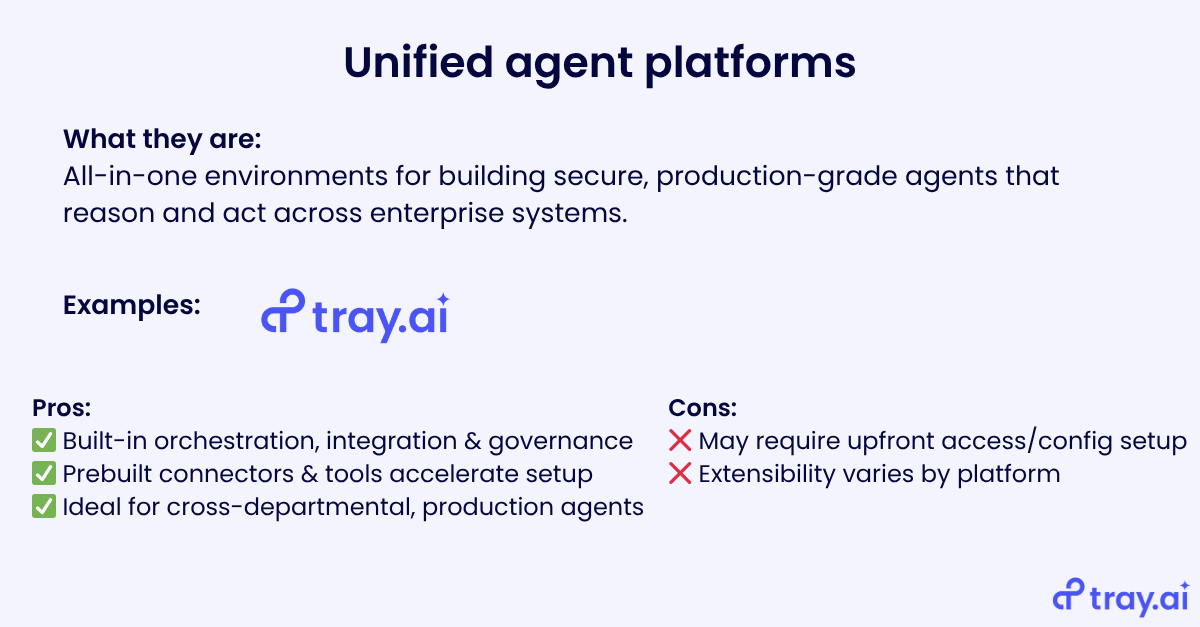

5. Unified agent platforms

Best for: Enterprises needing secure, governed agents that can take complex actions with full context of the use case

Unified agent platforms combine the building blocks of agent development such as LLM orchestration, data integration, tool execution, and governance into a single environment. They’re built for production: acting on internal data, executing across systems, and meeting IT/security requirements.

Unlike wrappers or frameworks, unified platforms include built-in access controls, audit logging, API authentication, and permission-aware execution. Many also include an iPaaS layer for deep integration, ensuring AI agents can act on real enterprise data without brittle, one-off connectors.
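Permission-aware execution and audit logging can be illustrated with a small guard around every tool call. This Python sketch is an assumption about how such a layer might work, not any specific platform’s implementation; `User`, `Tool`, `AuditLog`, and `execute_tool` are hypothetical. The key design choice is that the permission check and the audit record live outside the model, so a cleverly worded prompt cannot bypass them.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class User:
    name: str
    permissions: set[str] = field(default_factory=set)


@dataclass
class Tool:
    name: str
    required_permission: str
    fn: Callable[[str], str]


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, user: User, tool: Tool, allowed: bool) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user.name,
            "tool": tool.name,
            "allowed": allowed,
        })


def execute_tool(user: User, tool: Tool, arg: str, audit: AuditLog) -> str:
    """Run a tool on the user's behalf only if they hold the required permission."""
    allowed = tool.required_permission in user.permissions
    audit.record(user, tool, allowed)  # every attempt is logged, allowed or not
    if not allowed:
        raise PermissionError(f"{user.name} lacks {tool.required_permission!r}")
    return tool.fn(arg)


read_crm = Tool("read_crm", "crm:read", lambda account: f"Account {account}: active")
analyst = User("ana", {"crm:read"})
intern = User("ivan")
audit = AuditLog()
```

Here the agent acts with the requesting user’s permissions rather than a shared service account, which is one common way to keep agent actions within existing access controls.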

Pros

Built-in orchestration, integration, and governance

Prebuilt tools and accelerators to reduce setup time

Designed for production-scale agents across departments

Cons

May require upfront configuration and access provisioning

Flexibility depends on the platform’s extensibility

This is the most complete option for deploying agents that work securely, repeatedly, and at scale without stitching together multiple tools yourself.

Choosing the right approach

There’s no single “right” way to build an LLM-powered agent.

For experimentation or prototypes → Wrappers & frameworks

For structured support workflows → Conversational platforms

For lightweight automation → Automation platforms with AI

For complex, custom reasoning agents → Developer frameworks

For enterprise-scale, secure deployments → Unified platforms

For a deeper dive into different agent deployment options, check out our AI Agent Strategy Playbook.