We believe that Tray builders learn best by doing. That’s why we’ve added an educational module on task usage optimization to the Tray Build Practitioner learning path in Tray Academy, so that you can learn to identify more inefficient workflow-building patterns and change them to reduce task consumption.

Tray Academy offers a unique learning experience for builders of all skill levels, combining written and video content, hands-on interactive building exercises, and multiple-choice quizzes to test your knowledge. More information is available on the Tray Academy site.

Now let's dive in!

Understanding tasks in Tray

In Tray, a “task” is a single connector execution: every row in the “steps” panel of your workflow logs represents one task consumed. Estimating task usage, however, isn’t always straightforward.

For instance, a workflow with ten connectors, without any loops, branches, or boolean connectors, consumes ten tasks per execution. If it runs once per day, then the workflow will use 300 tasks every 30 days. Simple, right? However, things get trickier with loops or more complex workflows.

If your workflow involves loops, task usage will largely depend on how often the connectors within the loop are executed. If you are looping over a large amount of data, then even a small workflow can incur many tasks.

Similarly, if your workflow involves complex decision-making logic, with different connectors being executed depending upon various conditions, then task usage will be influenced by how often the various conditions are met.
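As a rough sketch of this arithmetic, the helpers below multiply tasks per run by runs over a 30-day window. They assume a simplified model: connectors outside a loop run once per execution, connectors inside a loop (counting the loop connector itself) run once per iteration, and branches are ignored. The function names and the 500-record loop are hypothetical.

```python
def tasks_per_run(fixed_connectors: int,
                  loop_iterations: int = 0,
                  connectors_per_iteration: int = 0) -> int:
    """Tasks for one execution under a simplified model (no branches).

    Connectors outside a loop run once; connectors inside a loop,
    including the loop connector itself, run once per iteration.
    """
    return fixed_connectors + loop_iterations * connectors_per_iteration


def monthly_tasks(per_run: int, runs_per_day: int, days: int = 30) -> int:
    """Total tasks over a window of `days` days."""
    return per_run * runs_per_day * days


# The linear example from the text: ten connectors, one run per day.
linear = monthly_tasks(tasks_per_run(10), runs_per_day=1)

# Hypothetical loop: 3 connectors outside the loop, 3 inside it,
# iterating over 500 records once per day.
looped = monthly_tasks(tasks_per_run(3, loop_iterations=500, connectors_per_iteration=3),
                       runs_per_day=1)

print(linear, looped)  # 300 45090
```

Even though the hypothetical loop workflow has only six connectors, the loop multiplies their cost by the number of records, which is why loops tend to dominate task usage.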

Two extremes of task usage

Let’s paint a clearer picture by understanding two extreme scenarios of task usage:

The ideal scenario: A workflow that receives only the exact data you want to manipulate, so decision-making logic can be kept to a minimum and the tasks it consumes are all “useful”. For example, a webhook that always receives one specific type of consistently formatted data.

The wasteful scenario: The workflow is bombarded with unnecessary data, so it runs decision-making operation after decision-making operation only to filter everything out without producing any useful result. For example, a webhook that continually receives junk data you don’t intend to process.

Most workflows fall between these two. An unoptimized workflow leans more towards the wasteful end, consuming tasks unnecessarily. Remember, it’s not about consuming fewer tasks, but about maximizing the utility of the tasks consumed.

Let’s walk through a handful of common patterns that can help us identify inefficient task usage and solutions for addressing them.

Identifying inefficient task usage patterns and how to fix them

Pattern #1: Workflows triggering more often than necessary

Sometimes a workflow runs more often than it needs to, consuming tasks unnecessarily. This pattern looks different depending on the trigger type:

Scheduled triggers: These run your workflow on a predefined schedule, which is often inefficient because of:

- Polling on a schedule even when a webhook event is available.

- Running a workflow every minute when a delay would be acceptable.

Webhook triggers: If your workflow is triggered by a webhook event, inefficiencies can emerge by:

- Listening to all events when only a subset is relevant.

- Choosing real-time webhook events when batch processing might be a more efficient alternative.

How to identify: To determine whether your workflow is optimized, you should answer some critical questions:

For Scheduled Triggers:

- Is near real-time execution critical or is a delay acceptable?

- Could a webhook replace the current schedule and reduce task volume?

For Webhook Triggers:

- Are you only receiving the necessary webhook events?

- Do you really need instantaneous execution, or can batch processing suffice?

By comparing your workflow against these questions, you can spot potential inefficiencies.
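To make the webhook questions concrete, here is a rough comparison under entirely hypothetical numbers: 1,000 webhook events per day, 200 of them relevant, with the trigger step itself assumed to count as a task. It also assumes the source can be queried for pending items and, in the bulk case, that the destination accepts many items in one call; neither is a specific Tray feature.

```python
# Hypothetical daily volumes; replace with your own numbers.
EVENTS_PER_DAY = 1_000    # events a broad webhook subscription delivers
RELEVANT_PER_DAY = 200    # events the workflow actually needs to process
BATCH_RUNS_PER_DAY = 24   # an hourly scheduled batch


def broad_realtime() -> int:
    # Every event runs trigger + boolean filter; relevant ones add 2 processing steps.
    return EVENTS_PER_DAY * 2 + RELEVANT_PER_DAY * 2


def narrow_realtime() -> int:
    # Subscribed to relevant events only: trigger + 2 processing steps, no filter.
    return RELEVANT_PER_DAY * 3


def hourly_batch(bulk_send: bool) -> int:
    # Each batch run: trigger + one call that fetches the pending items.
    fixed = BATCH_RUNS_PER_DAY * 2
    if bulk_send:
        # One bulk call per run hands every item to the destination.
        return fixed + BATCH_RUNS_PER_DAY * 1
    # Otherwise a loop handles items one by one: loop step + 2 processing steps each.
    return fixed + RELEVANT_PER_DAY * 3


for label, tasks in [("broad, real-time", broad_realtime()),
                     ("narrow, real-time", narrow_realtime()),
                     ("hourly batch, per-item loop", hourly_batch(bulk_send=False)),
                     ("hourly batch, bulk send", hourly_batch(bulk_send=True))]:
    print(f"{label}: {tasks} tasks/day")
```

Under these assumptions, narrowing the subscription gives the biggest win (2,400 down to 600 tasks per day). Batching only pays off when items can be handled in bulk: a per-item loop still costs a task per iteration, so it ends up slightly worse (648) than narrow real-time processing.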

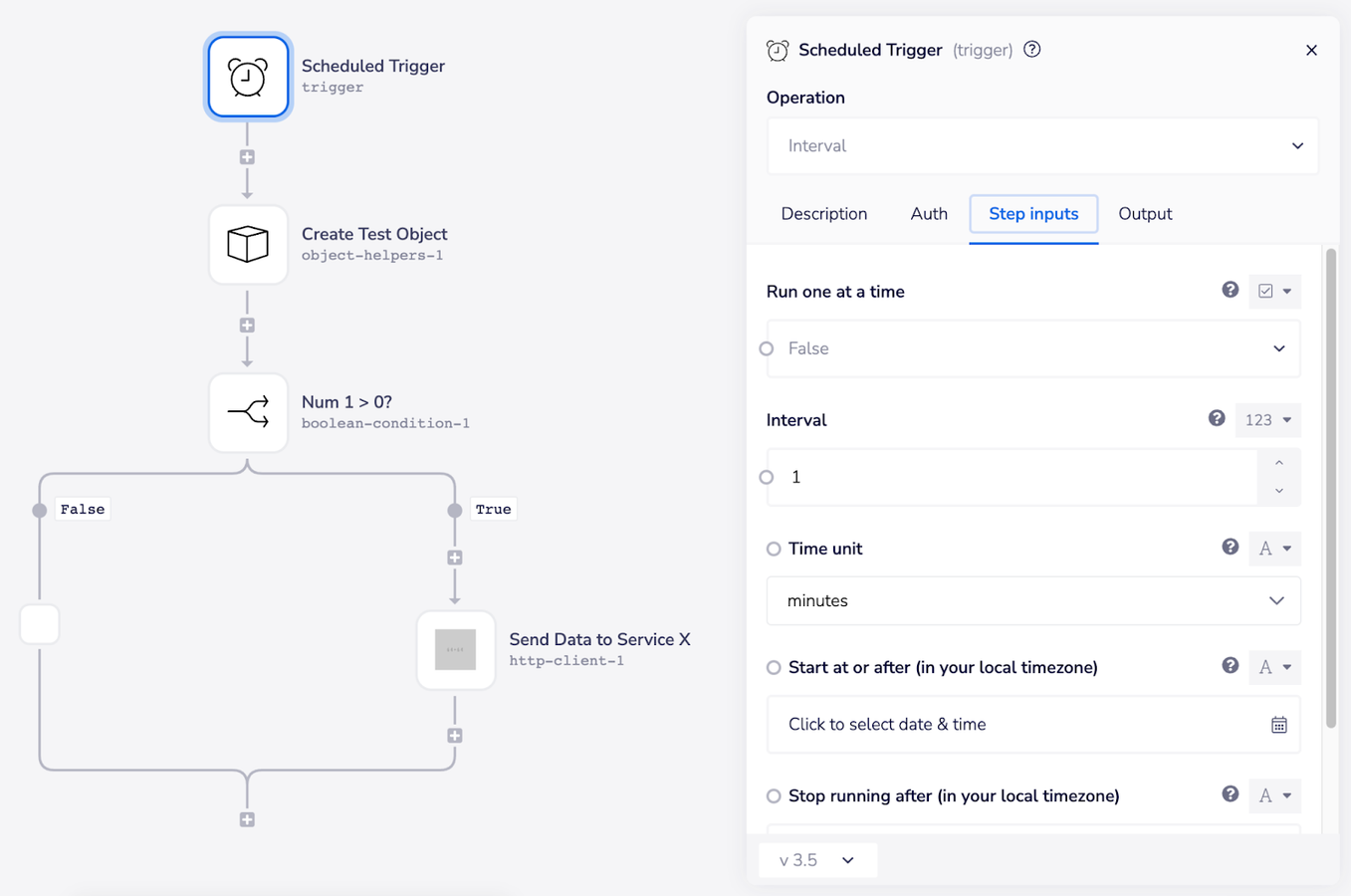

This workflow, which involves retrieving data, evaluating it, and then sending it to a third-party service if the evaluation criteria are met, runs on a scheduled interval of once per minute.

Example: The workflow above runs every minute. Although it uses at most four tasks per execution, it can consume up to 172,800 tasks in 30 days (43,200 executions × 4 tasks). Sounds excessive, right? Depending on business needs, you can:

- Opt for a 5-minute interval to cut usage to 34,560 tasks, an 80% reduction.

- If only weekend execution is required, consumption drops to between 46,080 and 57,600 tasks, because a 30-day window contains 8 to 10 weekend days. That’s a reduction of roughly 67% to 73%.

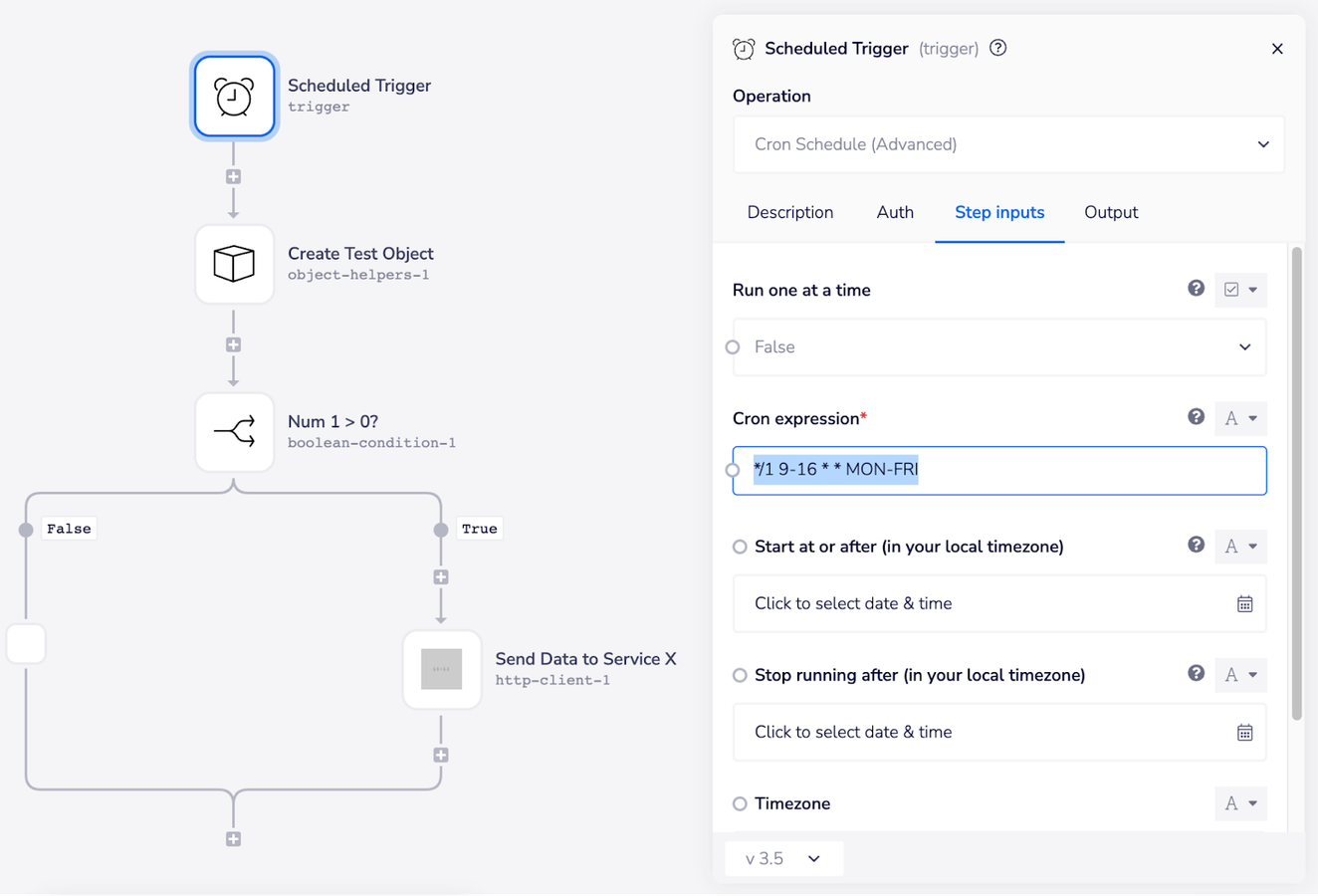

If weekday business hours are your prime time, advanced scheduling with a cron expression is the answer. It lets you run the workflow every minute from 9 AM to 5 PM, Monday to Friday. A 30-day window contains 20 to 22 weekdays of 480 executions each, so task usage drops to between 38,400 and 42,240 tasks, a reduction of more than 75% from the original workflow.

Note the “Cron Schedule (Advanced)” operation in the top right, as well as the highlighted text in the properties panel. This expression tells the trigger to fire every minute during each complete hour from 9 AM through 4 PM (inclusive, so the last execution each day is at 4:59 PM), Monday through Friday.

Cron expressions offer flexibility for precise scheduling. If you're new to them, many online editors can translate plain language descriptions into these expressions, simplifying the task for you.
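To sanity-check schedule estimates like these, the sketch below counts how many minutes in a 30-day window match a cron expression and multiplies by the four-task maximum. It assumes standard five-field Unix cron syntax (minute, hour, day of month, month, day of week, with 0 = Sunday) and supports only `*`, `*/n`, single values, ranges, and comma lists. Tray’s cron trigger may use a different dialect, so treat these expressions as illustrations rather than values to paste into the trigger.

```python
from datetime import datetime, timedelta

MAX_TASKS_PER_RUN = 4  # the example workflow uses at most four tasks per execution


def field_matches(field: str, value: int, field_min: int) -> bool:
    """Match one cron field: supports *, */n, n, a-b, and comma-separated lists."""
    for part in field.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            if (value - field_min) % int(part[2:]) == 0:
                return True
        elif "-" in part:
            lo, hi = (int(x) for x in part.split("-"))
            if lo <= value <= hi:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(expr: str, t: datetime) -> bool:
    minute, hour, day, month, weekday = expr.split()
    cron_weekday = (t.weekday() + 1) % 7  # Python: Monday = 0; cron: Sunday = 0
    # Simplification: real cron ORs day-of-month and day-of-week when both are
    # restricted; ANDing them is equivalent whenever either field is "*".
    return (field_matches(minute, t.minute, 0)
            and field_matches(hour, t.hour, 0)
            and field_matches(day, t.day, 1)
            and field_matches(month, t.month, 1)
            and field_matches(weekday, cron_weekday, 0))


def runs_in_window(expr: str, start: datetime, days: int = 30) -> int:
    """Count the minutes in [start, start + days) at which the schedule fires."""
    t, end, runs = start, start + timedelta(days=days), 0
    while t < end:
        runs += cron_matches(expr, t)
        t += timedelta(minutes=1)
    return runs


START = datetime(2024, 1, 1)  # a Monday; the window is an arbitrary choice
SCHEDULES = {
    "every minute": "* * * * *",
    "every 5 minutes": "*/5 * * * *",
    "weekends only": "* * * * 0,6",
    "weekdays, 9:00-16:59": "* 9-16 * * 1-5",
}

for label, expr in SCHEDULES.items():
    runs = runs_in_window(expr, START)
    print(f"{label:<22}{runs:>7} runs{runs * MAX_TASKS_PER_RUN:>9} tasks")
```

For the window starting Monday, 1 January 2024, which contains 22 weekdays and 8 weekend days, this reports 172,800 tasks for every minute, 34,560 for every five minutes, 46,080 for weekends only, and 42,240 for weekday business hours. Other 30-day windows shift the last two figures slightly.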

Pattern #2: Scoping data requests too broadly and processing larger-than-necessary data sets

How to identify: If an action block (such as a loop iteration or an entire workflow run) completes without performing any useful function, it has probably wasted tasks that you can recover. In particular, if a boolean condition inside a loop has a path that sends the loop straight to its next iteration without performing any work, every iteration that takes that path is wasting tasks.

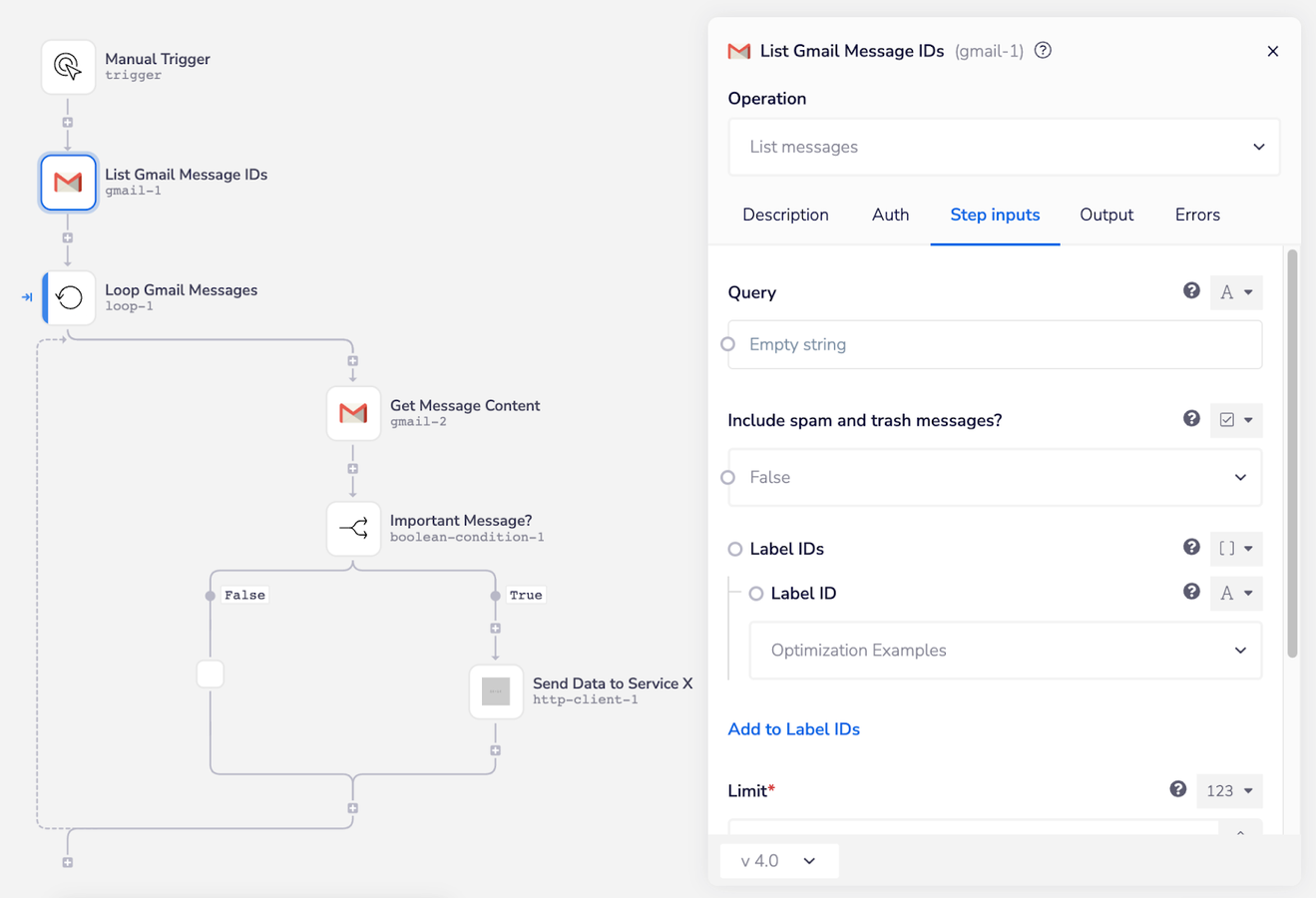

Example: Consider a workflow that lists Gmail message IDs. For each ID, it retrieves the email’s content and checks whether the email is “important” based on its subject line. If it is, the email is forwarded to another service, referred to as “Service X” in the screenshot.

In this example workflow, an “unimportant” email causes the loop to iterate without performing any useful work, leading to wasted task usage.

In this original workflow, each email costs:

- Three tasks when the email isn’t important (loop-1, gmail-2, and boolean-condition-1).

- Four tasks when the email is important (loop-1, gmail-2, boolean-condition-1, and http-client-1).

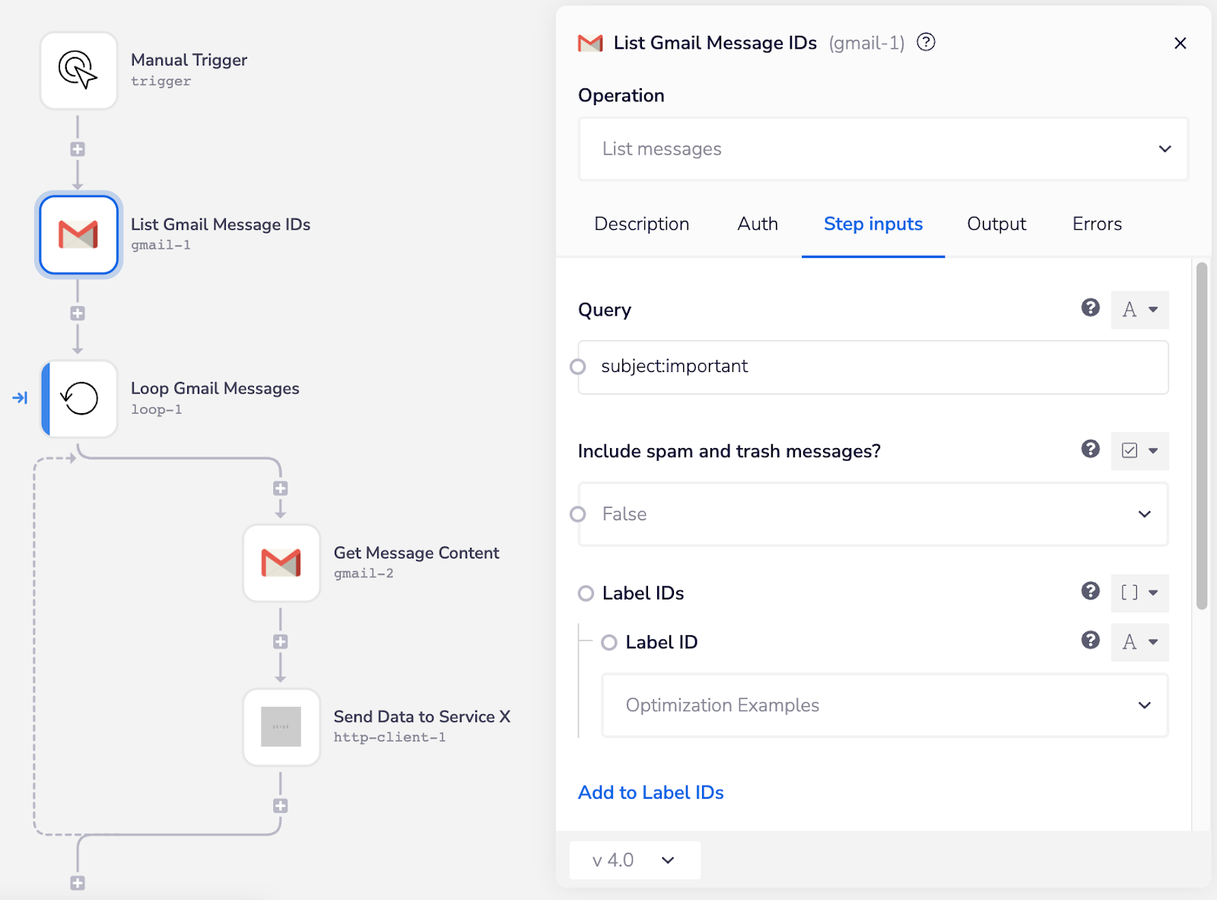

An optimized workflow would instead use Gmail’s “Query” feature to request only emails with “important” in the subject line, removing the need to check each email’s importance inside the loop.

Our optimized version of the workflow utilizes Gmail’s query functionality, allowing us to remove the boolean condition from the loop.

In this version of the workflow, we made two key changes:

- We utilized Gmail’s “Query” functionality to request only IDs for messages with “important” in the subject line.

- As a result of using the Query functionality, we were able to remove the boolean condition from the loop.

This small change results in significant task savings:

Original Workflow Task Consumption: 706 tasks (for 50 important and 50 unimportant emails processed twice a month).

Optimized Workflow Task Consumption: Just 306 tasks under the same conditions.

That’s a reduction of nearly 57% in task consumption for this single workflow.
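These totals can be reproduced with a few lines of arithmetic. The per-email costs come from the lists above; the three tasks per run outside the per-email work (`FIXED_TASKS_PER_RUN`) aren’t itemized in the text and are inferred from the 706 and 306 totals, so treat that constant as an assumption.

```python
FIXED_TASKS_PER_RUN = 3   # inferred from the article's totals, not itemized there
RUNS_PER_MONTH = 2


def tasks_per_run(important: int, unimportant: int, optimized: bool) -> int:
    if optimized:
        # Gmail's query returns only important IDs: loop-1, gmail-2, http-client-1.
        return FIXED_TASKS_PER_RUN + important * 3
    # Every email: loop-1, gmail-2, boolean-condition-1; important ones add http-client-1.
    return FIXED_TASKS_PER_RUN + important * 4 + unimportant * 3


original = RUNS_PER_MONTH * tasks_per_run(50, 50, optimized=False)
optimized = RUNS_PER_MONTH * tasks_per_run(50, 50, optimized=True)
print(original, optimized, f"{1 - optimized / original:.1%}")  # 706 306 56.7%
```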

Note: If you can’t filter data at the source, you can still reduce task usage by filtering the list before initiating the loop.
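A rough sketch of why filtering before the loop helps, assuming the list items already carry the field you filter on (unlike Gmail message IDs, which need a lookup first) and that a single list-filtering step costs one task. The records and per-step costs are hypothetical.

```python
# Hypothetical list: 100 records, half with "important" in the subject.
records = [{"id": i, "subject": "important update" if i % 2 == 0 else "newsletter"}
           for i in range(100)]


def is_important(record: dict) -> bool:
    return "important" in record["subject"]


def filter_inside_loop(items: list[dict]) -> int:
    # Each item: loop iteration + boolean check; matches add one action step.
    return sum(2 + (1 if is_important(r) else 0) for r in items)


def filter_before_loop(items: list[dict]) -> int:
    # One filtering step over the whole list, then loop iteration + action per match.
    matches = [r for r in items if is_important(r)]
    return 1 + 2 * len(matches)


print(filter_inside_loop(records), filter_before_loop(records))  # 250 101
```

The saving comes from two places: non-matching items never start a loop iteration, and the per-item boolean disappears entirely.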

Pattern #3: Redundant connector usage

In this pattern, the workflow uses more connectors than it needs to, and every extra connector execution consumes a task.

For example, chaining multiple boolean connectors to filter data, instead of combining the conditions in a single boolean connector, wastes tasks.

How to identify: Check whether boolean connectors are nested inside one another. If several branches of the nested structure lead to the same outcome, tasks are probably being wasted. Also check whether multiple service connectors fetch separate pieces of data that a single operation could retrieve.

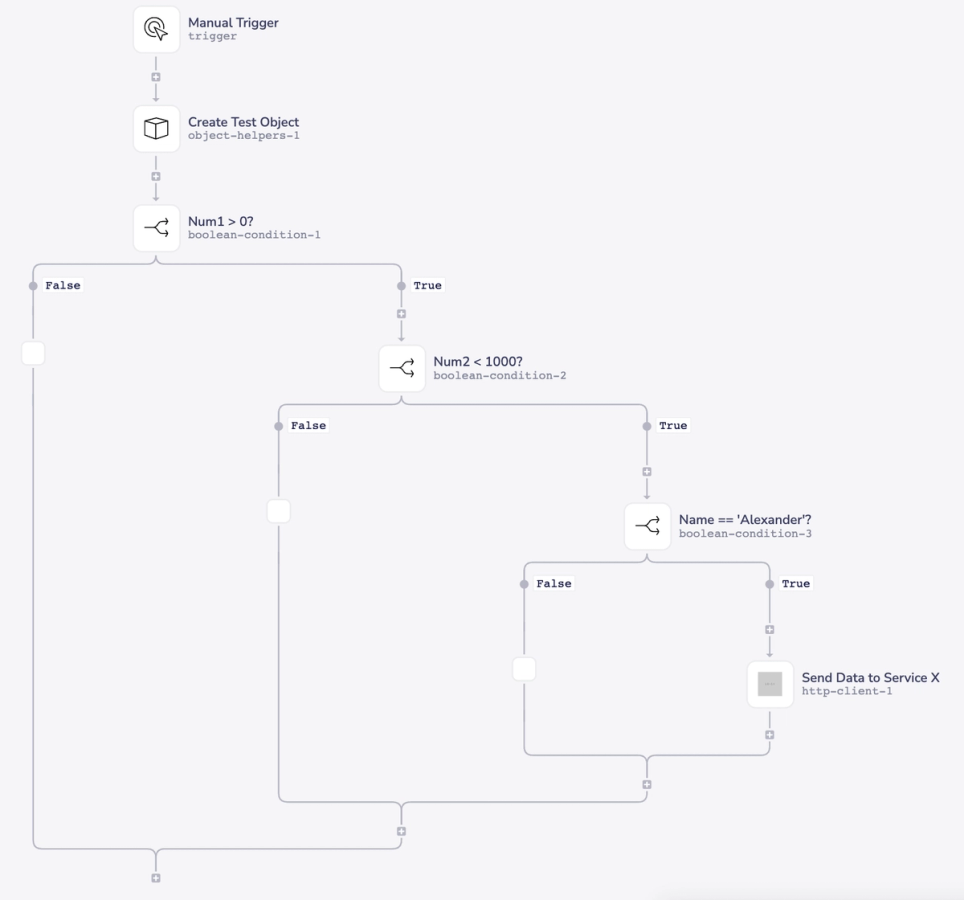

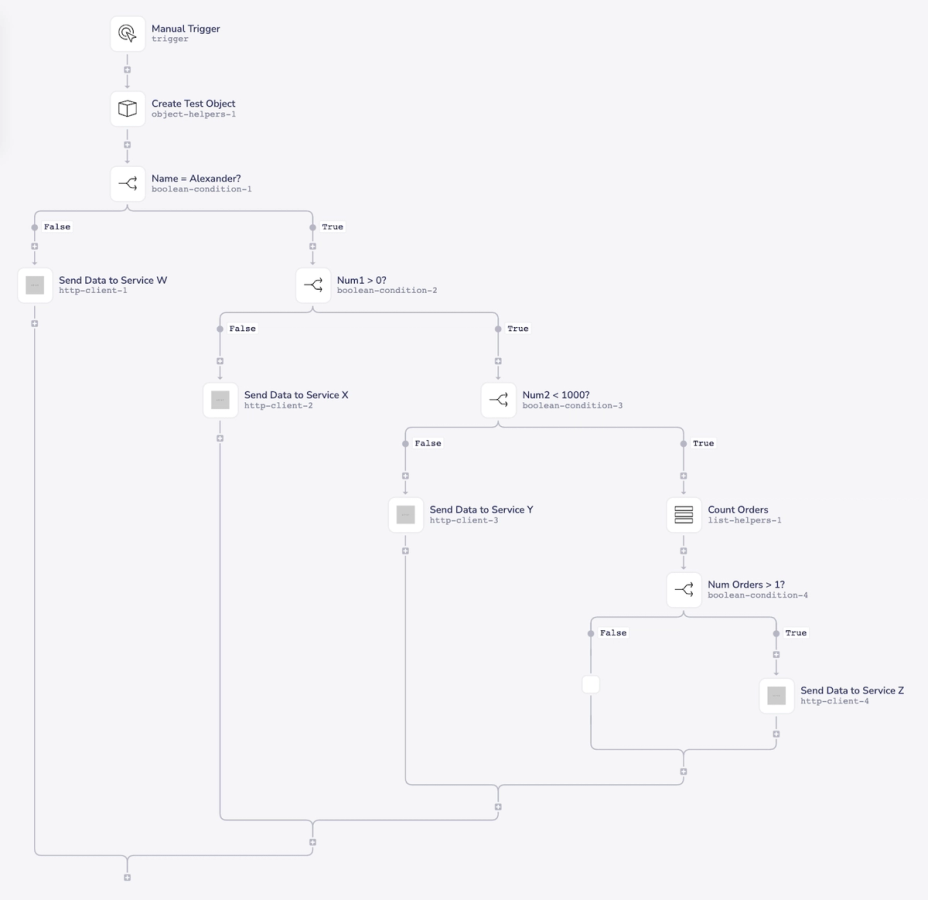

Example #1: This workflow evaluates data against several conditions and performs an action only when all of them are met. Because it nests multiple booleans, several paths lead to the same outcome, and each execution consumes anywhere from three to six tasks depending on the path taken.

In this example, we are creating a test object with multiple fields (Num1, Num2, and Name), and then sending data to Service X when all three boolean conditions evaluate to True.

None of the three “False” branches performs any useful work, so all three paths potentially waste tasks. If this workflow runs once per hour, it can use up to 4,320 tasks every 30 days (720 executions × 6 tasks).

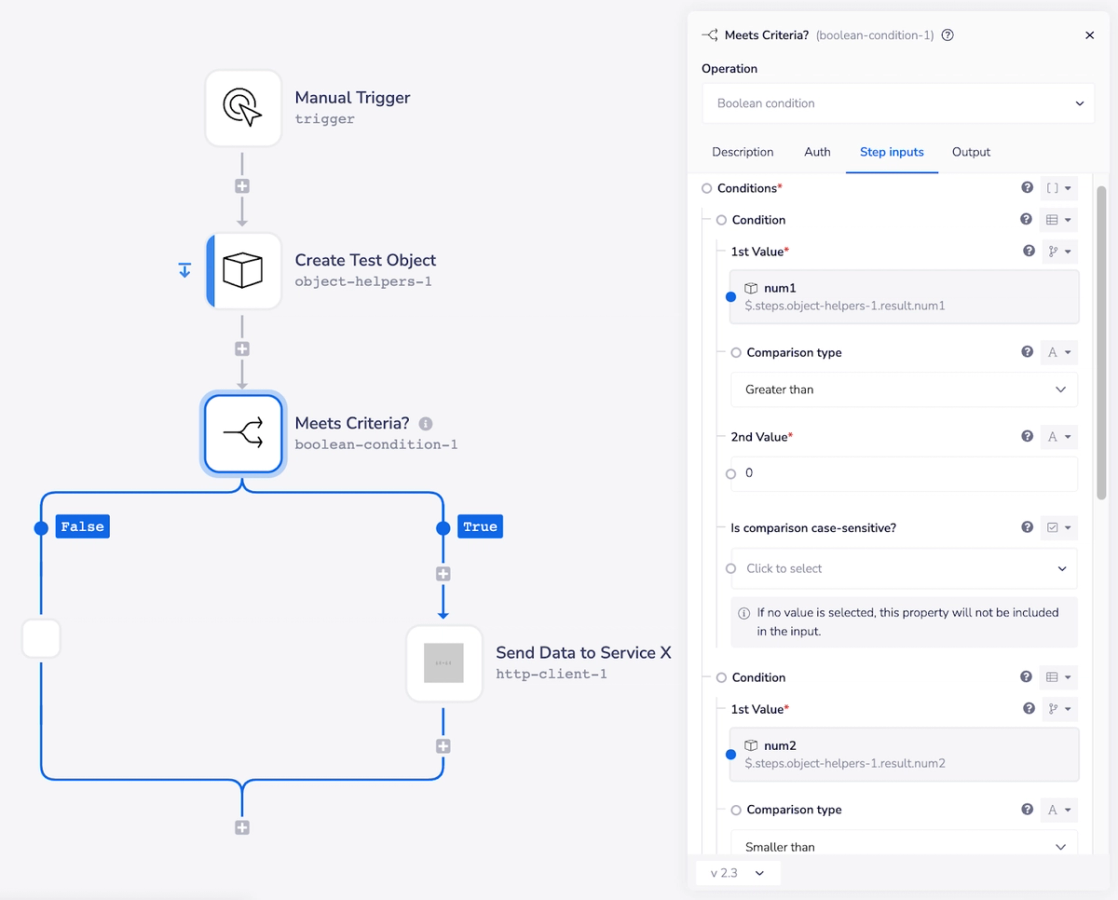

An optimized version of this workflow uses a single Tray boolean condition connector to evaluate multiple conditions at once, with the option to require that all conditions be satisfied or that any of them be satisfied. This change reduces the maximum number of tasks per execution from six to four.

The first condition and most of the second are visible here on the right. You can scroll down in the properties panel to find the “Satisfy ALL conditions” option within our boolean connector.

Every run in which a condition fails now costs exactly three tasks, whereas some of those runs previously cost four or five. Running once per hour, the workflow’s potential consumption falls from 4,320 to 2,880 tasks every 30 days, a reduction of about 33%.
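The path costs in this example can be enumerated directly. This sketch assumes two tasks per run before the first boolean (implied by the three-to-six range, for example the trigger and the object-creation step) and uses Python’s `all()` to stand in for the “Satisfy ALL conditions” option.

```python
from itertools import product

FIXED = 2                   # steps before the first boolean, inferred from the 3-6 range
RUNS_PER_30_DAYS = 24 * 30  # hourly schedule


def nested_tasks(c1: bool, c2: bool, c3: bool) -> int:
    """Three booleans nested one inside the next, then Service X."""
    tasks = FIXED + 1              # boolean 1 always runs
    if not c1:
        return tasks
    tasks += 1                     # boolean 2 runs only on boolean 1's True branch
    if not c2:
        return tasks
    tasks += 1                     # boolean 3 runs only on boolean 2's True branch
    if not c3:
        return tasks
    return tasks + 1               # all True: send data to Service X


def combined_tasks(c1: bool, c2: bool, c3: bool) -> int:
    """One boolean with "Satisfy ALL conditions", then Service X if it passes."""
    return FIXED + 1 + (1 if all((c1, c2, c3)) else 0)


for combo in product((True, False), repeat=3):
    print(combo, nested_tasks(*combo), combined_tasks(*combo))

worst_nested = max(nested_tasks(*c) for c in product((True, False), repeat=3))
worst_combined = max(combined_tasks(*c) for c in product((True, False), repeat=3))
print(RUNS_PER_30_DAYS * worst_nested, RUNS_PER_30_DAYS * worst_combined)  # 4320 2880
```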

Example #2: This more advanced example adds complexity because the action taken depends on which branch the data follows, so the boolean connectors can’t be simplified as easily.

In the following unoptimized workflow, we are evaluating boolean conditions similar to the previous example, but with some new complexity. In addition to the original three conditions, we’re also using a List Helpers “Count Items” operation to determine the number of items inside the “Orders” list contained within our data:

On top of the fourth condition, we’re also performing a different action on every single branch of this structure (sending data to services W, X, Y, and Z, or doing nothing). Because of this, we cannot simply collapse it all down into a single boolean connector with multiple conditions.

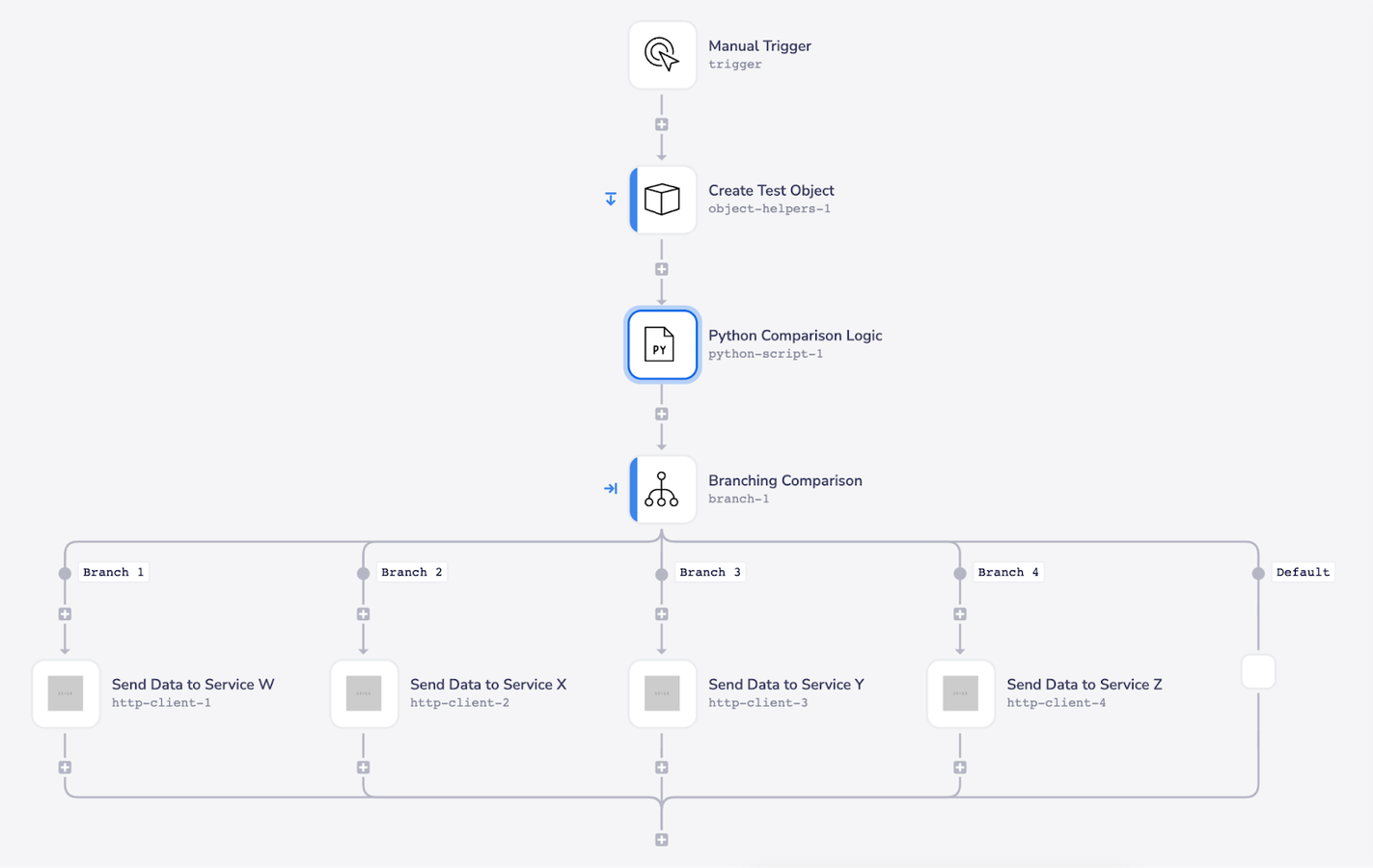

There’s no simple recipe for optimizing workflows like this. With Tray’s in-line coding capabilities, you can always consolidate the logical operations into a single connector, but that isn’t always the most task-efficient strategy. Here’s what this kind of consolidation can look like:

In this example, we’ve written a Python script to perform all of the logical operations that we were previously accomplishing with boolean connectors and list helpers.

The script accepts values from our input data and returns a different output depending on which conditions were met. To make it easy to compare the script directly against the original nested boolean structure, it’s shown below as a series of if statements:

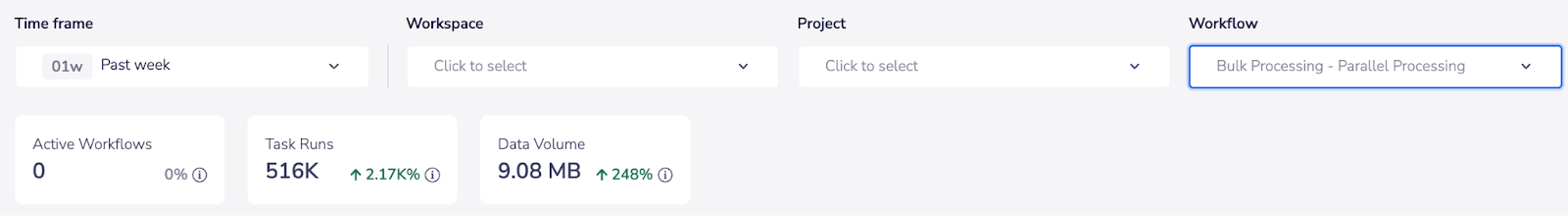
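Because the script itself appears only as a screenshot, here is a hypothetical sketch of the same idea in plain Python. The thresholds, the mapping of branches to services W, X, Y, and Z, and the function name are all invented for illustration, and the sketch doesn’t follow the input/output conventions of Tray’s script connector, so it would need adapting before use in Tray.

```python
def route(data: dict) -> str:
    """Return which service should receive `data`, or "none".

    Hypothetical conditions and routing: one function replaces the nested
    booleans and the List Helpers "Count Items" step.
    """
    order_count = len(data.get("Orders", []))   # stands in for "Count Items"

    if data["Num1"] <= 10:                      # condition 1 fails
        return "none"
    if data["Num2"] >= 100:                     # condition 2 fails
        return "W"
    if not data["Name"]:                        # condition 3 fails
        return "X"
    if order_count < 5:                         # condition 4 fails
        return "Y"
    return "Z"                                  # every condition passes


print(route({"Num1": 42, "Num2": 7, "Name": "Acme", "Orders": [1, 2, 3]}))  # Y
```

Downstream, a single branching step on the returned value can replace the nested structure, which is what makes the per-run cost constant regardless of the path taken.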

To decide whether consolidation will actually reduce task usage, calculate the original workflow’s average task usage per run. In Tray’s Insights hub, accessible from the Tray dashboard, filter by the last week and choose the relevant workflow.

The hub displays the total number of tasks the workflow used that week. Divide this total by the number of executions. If the result exceeds the estimated task usage of the “optimized” workflow (5 tasks per execution in this case), switching to the optimized version should improve efficiency.

However, consolidation doesn’t always pay off. For instance, if the first boolean condition in the original workflow is “False” 99% of the time, almost every run uses four tasks. Even if the remaining 1% of runs took the 8-task route, the average would be 0.99 × 4 + 0.01 × 8 = 4.04 tasks per run, which is less than the 5 tasks per execution of the “optimized” workflow. In that case, the “optimized” workflow would actually increase consumption.
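Both calculations are simple enough to script. This sketch takes weekly totals as you might read them from the Insights hub (the 4,040 tasks over 1,000 executions are hypothetical), and separately computes the expected tasks per run from branch probabilities, reproducing the 4.04 figure above.

```python
def average_tasks_per_run(total_tasks: int, executions: int) -> float:
    """Observed average: weekly task total divided by weekly executions."""
    return total_tasks / executions


def expected_tasks_per_run(paths: list[tuple[float, int]]) -> float:
    """Expected average from (probability, tasks on that path) pairs summing to 1."""
    return sum(probability * tasks for probability, tasks in paths)


OPTIMIZED_TASKS_PER_RUN = 5

observed = average_tasks_per_run(4_040, 1_000)          # hypothetical Insights totals
expected = expected_tasks_per_run([(0.99, 4), (0.01, 8)])

for label, value in (("observed", observed), ("expected", expected)):
    worth_it = value > OPTIMIZED_TASKS_PER_RUN
    print(f"{label}: {value:.2f} tasks/run, consolidation saves tasks: {worth_it}")
```

The observed figure tells you what actually happened last week; the expected figure lets you test "what if" branch distributions before rebuilding anything.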

Efficiency is a delicate balance

Optimization is more than a measure of task efficiency: it’s a delicate balance between efficiency and effort. Where you and your team sit on this spectrum depends on your specific performance requirements and the maturity of your automation skill sets. As you evolve, you’ll move along this spectrum, adjusting and readjusting to the needs of individual automation projects. That’s why it’s important to agree with your team on the efficiency benchmarks each automation project needs to meet.

Remember: optimizing workflows is both an art and a science. The patterns outlined here address common inefficiencies, but they don’t capture every possible improvement. Other strategies include combining workflows that share identical triggers, or merging the logic of a workflow invoked through a “call workflow” connector into the calling workflow. Proceed with caution, especially with the latter, so that your workflows stay compartmentalized.

At the heart of these optimization opportunities are a couple of core principles:

- Multiple connectors can be condensed into fewer ones.

- Any connector that doesn't contribute to data processing should be removed.

Yet, when it comes to building workflows, efficiency must be weighed against comprehensibility. Writing scripts can make a workflow more efficient, but it may cost readability and maintainability. Depending on your team’s expertise, pushing too hard for efficiency can hinder more than it helps. Sometimes a slight inefficiency benefits the team in the long run by keeping workflows accessible and easy to manage. Ultimately, it’s up to your team to strike the balance that fits its skills and priorities.

If you have any questions about task usage optimization, building workflows, or anything at all, please don’t hesitate to reach out in the Tray Community.