If you're in marketing, sales, revenue operations, or customer support, you won't be surprised to learn that 58% of B2B firms rely on live chat tools, like Intercom or Drift. With these tools, teams can engage new leads from their website with real-time information about pricing or features while customer support representatives facilitate discussions with customers to drive retention by rapidly providing support for their technical needs.

Today, many creative firms are introducing features like automated chat bots, instant response times, and other interactions that are deeply integrated with the customer journey. But your live chat strategy is only as good as the data that informs it. You might want to know the answers to questions like:

- What are your prospects asking about the most, and how can you better surface that information on your website?

- How do response times correlate with issue resolution and customer satisfaction?

- And how can you disseminate valuable data from these interactions across your tech stack to promote a cohesive customer journey?

To tap into these insights, you’ll need to pipe data from your live chat tool into a platform like a data warehouse that can segment and analyze information at scale. But the custom integrations that power data transfer between platforms traditionally require developer resources or technical expertise, limiting the capabilities of marketing and support teams to take control of their data. However, we’ll show you how you can craft a process that flexibly extracts and manipulates data from your live web chats for easy segmentation and analysis in your data warehouse, all with an easy-to-use, low-code platform.

With an automated data transfer process in place, you’ll have access to searchable chat logs, response data, customer context, and more in your data warehouse. Here are just a few of the ways you can use that data to convert and retain customers:

- Monitor for popular questions or keywords, chat response times, and more using business intelligence (BI) dashboards like Looker or Sisense.

- Fulfill complex customer requests using a super-charged chatbot powered by

- Natural Language Processing (NLP).

- Boost your average customer ratings and net promoter score by sending automated outreach to customer advocates.

To implement this data upload process and all of the others we’ve mentioned, we used our low-code platform for integrating cloud-based applications and creating multi-step automated processes. Here's how:

How to automate data transfer from live chat tools

First, we’ve separated our walkthrough into 3 stages:

- Creating a scheduled export job - We run this workflow every night to request a new export job from our live chat tool (like Intercom or Drift). If a request is successful, we proceed to the next step. If a request isn’t successful, we send an alert to stakeholders in our internal chat tool (like Slack or Microsoft Teams).

- Waiting for confirmation - Here, we wait until the export job is complete and proceed to the next step. This stage helps prevent issues with delays in export times and ensures we retrieve the full dataset.

- Formatting and loading data - Finally, we transform the data with logic helpers and upload it to our data warehouse, Redshift.

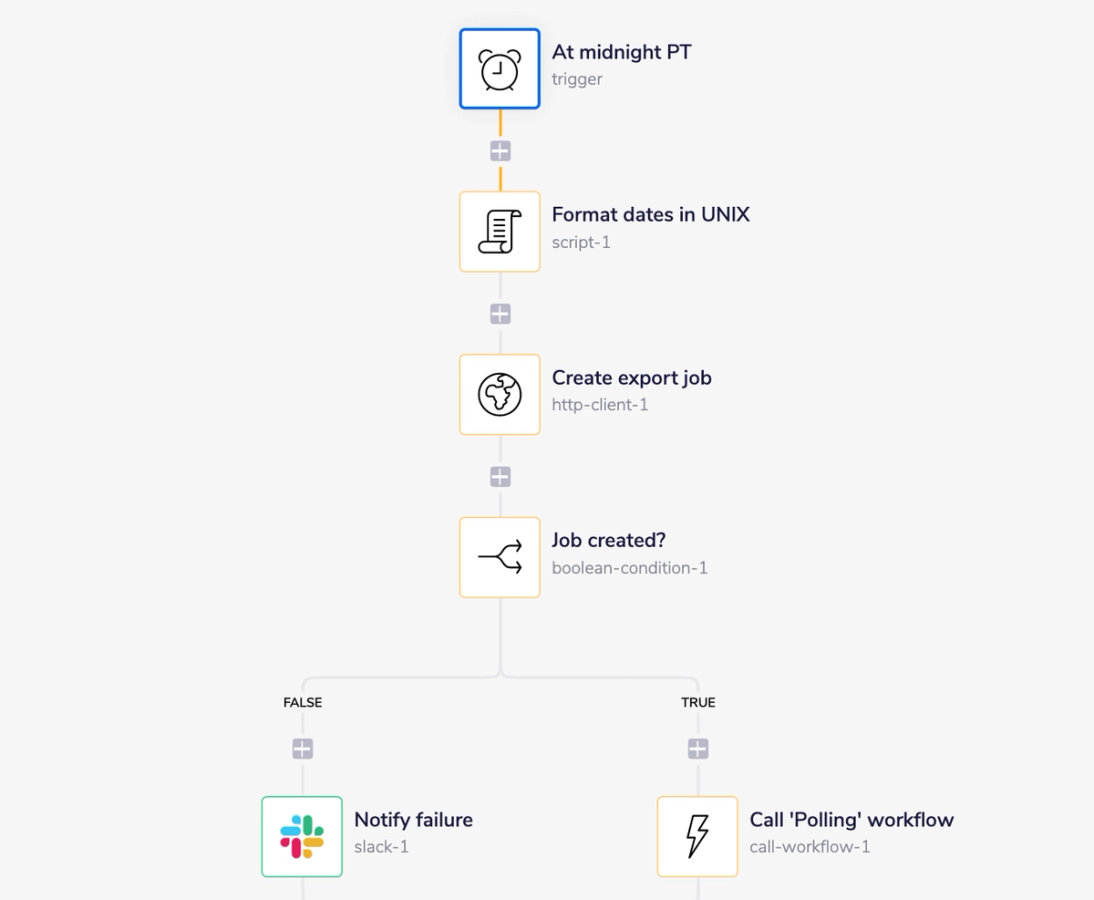

Stage 1: Creating a scheduled export job

To kick off this workflow, we want to submit a data export request to our live chat tool on a predetermined cadence. This stage ensures that we’re successfully pulling all information without relying on tedious, manual exports.

Scheduling and confirming a new export job

At midnight PT - We start with a scheduled trigger, where we define the frequency of this workflow. We chose to run our data transfer every night at midnight Pacific Time (PT), but we can customize this step to run at whatever interval makes sense for our team.

Format dates in UNIX - Next, a simple script helper lets us format the date and time from our scheduled trigger into UNIX time, the format accepted by our live chat tool. This step outputs a formatted date range, which we use to define the data we want to collect.

Create export job - Using our formatted date range, we then query our live chat tool to create a new export job. We submit this request using our universal HTTP connector, which can push or pull data from any cloud-based service that has an open data source.

Job created? - Here, we want to confirm that we successfully created a job to prevent our workflow from running without a new batch of data. To do this, we use Boolean (true/false) logic that routes our workflow to one of two outcomes:

- If we didn’t create a job, then we notify any relevant stakeholders that there was an unexpected job failure. This alert gives our team time to troubleshoot any issues and opt for a manually-triggered backup (we’ll cover what a manually-triggered backup looks like at the end of the post).

- If we created a job, then we use a call workflow helper that starts the next stage of our workflow.

Now, our export job has started, but we still need to process, format, and load our data. Over the next two stages, we’ll confirm that our job is complete and pipe that data to our data warehouse.

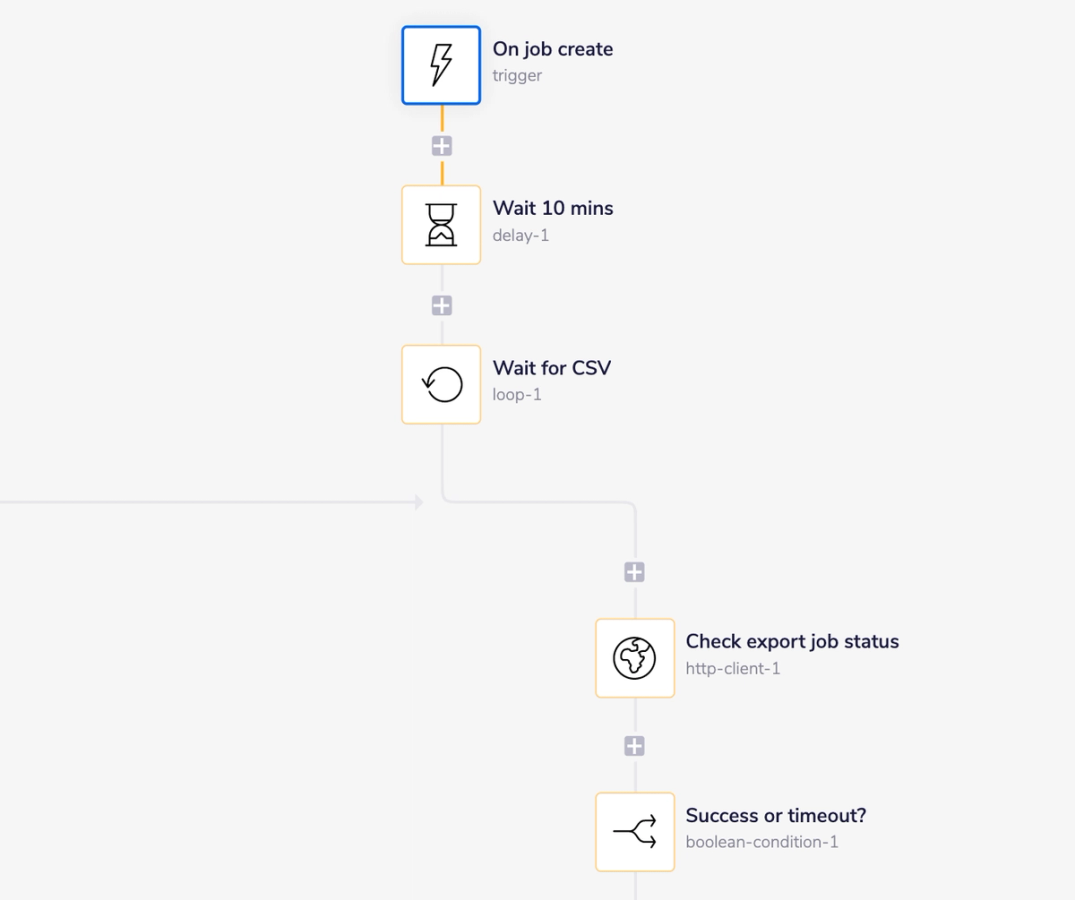

Stage 2: Waiting for confirmation

The second leg of our workflow listens for confirmation from our live chat tool’s API that our export job is complete. This stage plays an important role in making sure we capture all chat data within our predetermined range and alerts stakeholders if there are any issues.

Waiting for successful export confirmation

On job create - Our callable trigger listens for a response from our first stage. When it receives a response, it kicks off the following logic with a 10 min delay to give the export job some time to wrap up.

Wait for CSV - After 10 minutes, our workflow enters a loop that waits until the job is complete. For each run of our loop, we first check the status of the export job. Then, we confirm if the job is complete or still needs time to run.

Checking for successful export

Wait 10 mins - If the job is not complete and we haven’t exceeded a 200-minute timeout (calculated by multiplying the number of loops by the 10-minute delay), we wait another 10 minutes and try again with another loop.

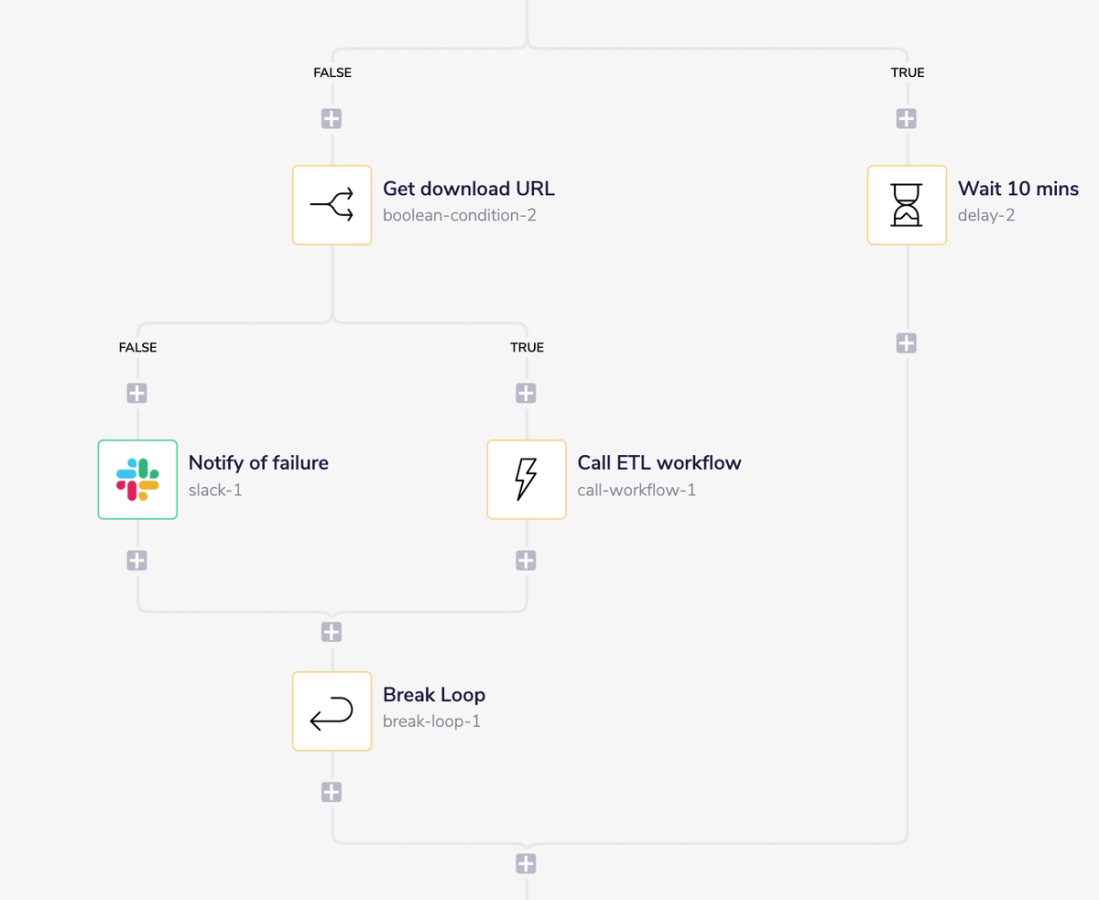

Get download URL - If the job is complete or we have exceeded our timeout, we run our workflow through another Boolean condition that checks if a download URL is available.

- If we don’t find a download URL, then we send a timeout failure message to any relevant stakeholders. This alert is yet another line of defense in our workflow to prevent missing data.

- If we find a download URL, then we use a call workflow helper to signal to our last stage that the export job is complete.

After the workflow registers a completed job or a timeout, it ends the loop with a break loop helper.

Stage 3: Formatting and loading data

Now that we have a list of raw live chat data, we need to prepare it before we load the data into our data warehouse. We’ll walk you through how we prepare these lists; However, it’s important to remember that you can customize this logic to meet the needs of your use case.

Preparing and paginating list of live chat data

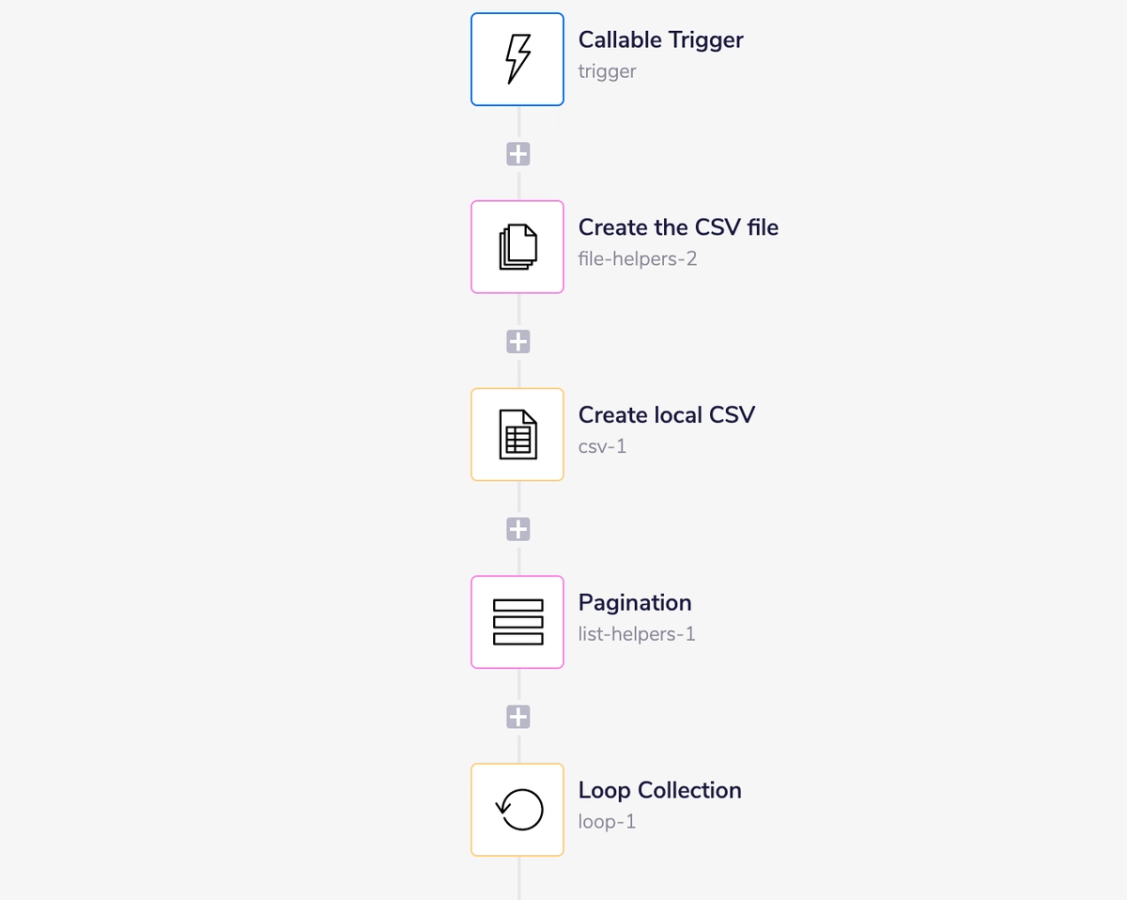

Callable trigger - Once more, we use a callable trigger to listen for a response from our second stage, which confirms that our list is ready.

Create CSV file - Next, we create a CSV file from the download URL provided by our export job. This file contains our unformatted list.

Create local CSV - Then, we clone our list into a temporary CSV, which we’ll use to make the formatting edits before we load it into our data warehouse.

Pagination - Here, our list helper paginates our list into pages of 7,500 rows to separate the data into more manageable units.

Loop pages - With our CSV file prepared, we now parse each page to apply the following logic. Our loop concludes once we’ve processed all of the pages of our CSV.

Formatting and loading list of live chat data

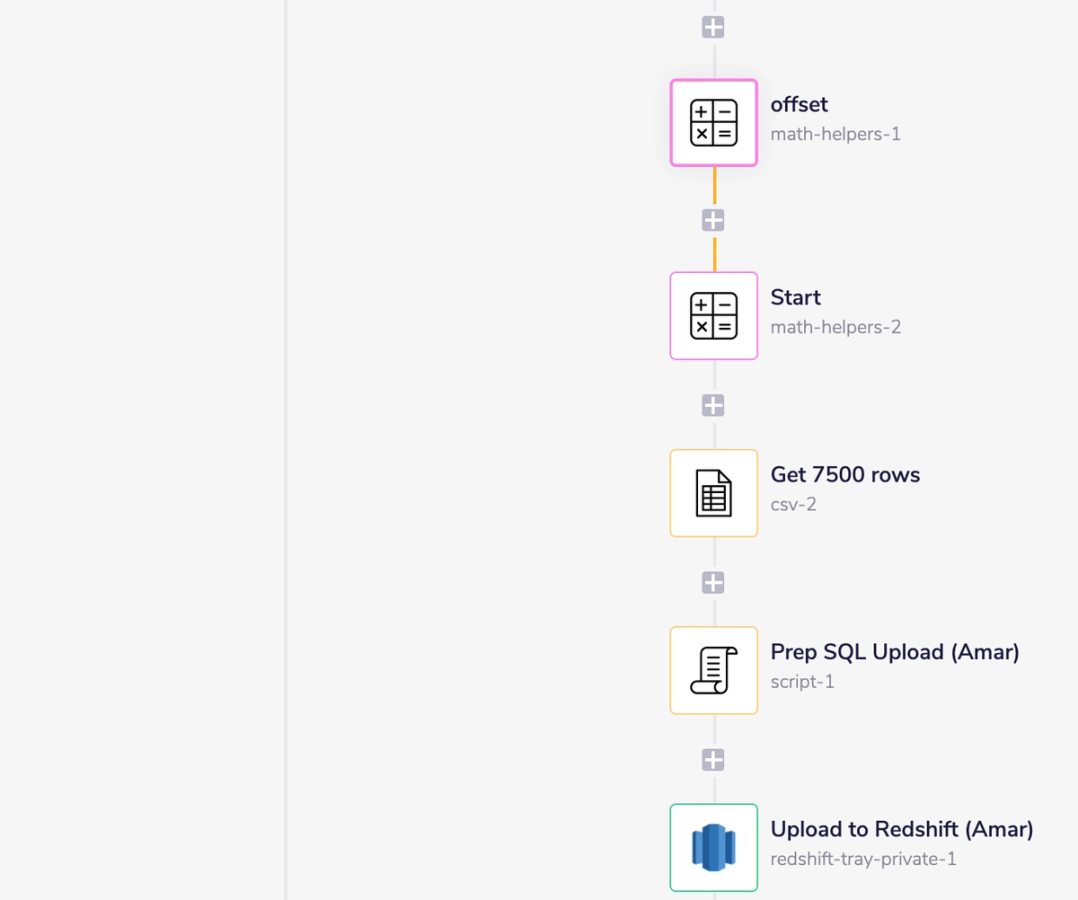

Math helpers - Using two math helpers, we calculate the starting value for each batch to ensure we don’t load duplicate data.

Get 7500 rows - From our starting value, we get the next 7,500 rows of data from our CSV.

Prep SQL upload - Here, we use another script helper to run our list through a brief SQL query that labels and formats our data.

Upload to Redshift - Finally, we upload this page to our data warehouse. We’ll conclude the workflow when we’ve added all of the pages.

Fuel data-driven insights and campaigns with automation

You just extracted, transformed, and loaded a list of live chat data into your data warehouse using low-code general automation. Now, you can easily slice and dice datasets to track metrics like chat length and commonly-used keywords using business intelligence tools.

But what if you need to upload a missing set of chat data ad hoc? If you want more control over when you upload lists of data, check out the bonus stage where we’ll show you how to set up a manual upload.

Bonus Stage: Manually triggering uploads

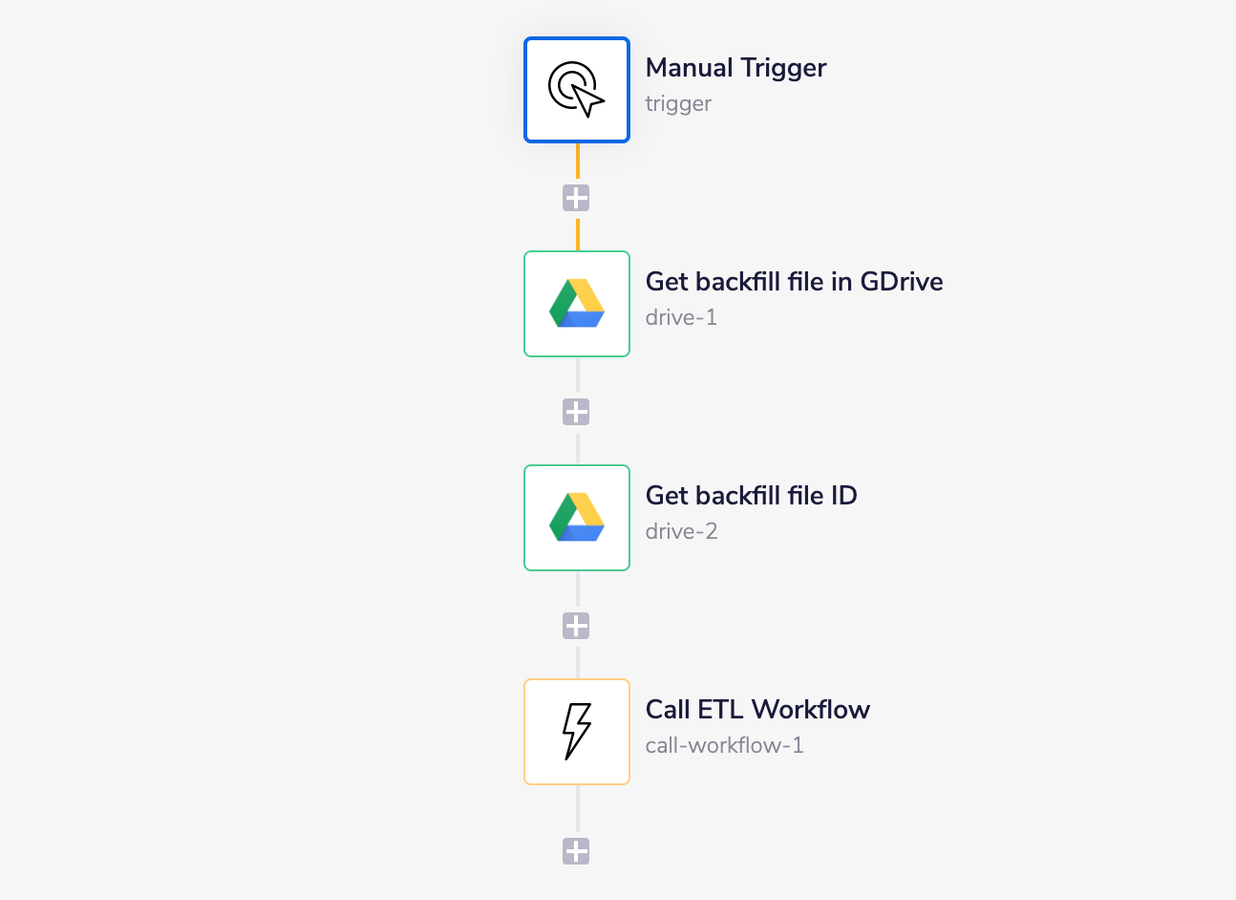

Manually triggering data uploads

Before our upload process begins with a manual trigger, we first want to upload the CSV file of data into our cloud-based storage tool (we use Google Drive). Once the file is ready, we run the workflow to pull data from the file and the file ID, and trigger the third stage of our list upload workflow using a call workflow helper. Easy as that!

With an automated data manipulation workflow like the one we’ve discussed, you can maximize lead conversions and minimize customer support wait times by fueling data-driven insights. If you’re interested in seeing more ways that you can use automation to improve revenue processes across your tech stack, watch and request a demo.