Large language models (LLMs) are quickly becoming core infrastructure inside the enterprise. Instead of just powering chatbots for answering simple questions, they’ve been promoted, now sitting behind AI agents that reason over data, make decisions, and take action across systems.

To understand how agents differ from other AI applications like copilots and chatbots, see our comparison of AI agents vs copilots vs chatbots for enterprise use.

But as teams move from experimentation to production, one question comes up again and again:

Which LLM should we standardize on?

In this blog, we’ll compare the “big three” LLMs businesses are evaluating: ChatGPT vs Claude vs Gemini. We’ll look at each vendor’s capabilities through an enterprise lens, specifically for organizations building or operating AI agents. We’re not here to crown a winner, but to help teams understand how these models differ in practice when security, governance, integration, and long-term operability matter.

What you are really choosing when you pick an LLM

For enterprises, selecting an LLM vendor is more complex than selecting software to solve a business problem. Essentially, you’re choosing an operating model.

Each provider brings a different approach to data handling, admin controls, rate limits, versioning, and integration surfaces.

For a broader framework on evaluating LLMs in the enterprise, including security, governance, and operational constraints, see our comprehensive guide to enterprise LLMs.

Those choices affect how easily a model can be embedded into agent workflows, how safely it can interact with internal systems, and how predictable it will be to run at scale.

For teams building agents, the model is only one layer in a larger system. The harder problems usually show up around orchestration: deciding when to retrieve data, when to call tools, how to enforce permissions, and how to observe what happened after the fact. That context matters when comparing vendors.

How enterprises should evaluate LLMs for agent use cases

Before looking at specific vendors, it helps to clarify the criteria that actually matter for agent workloads.

LLM performance

Performance is not just about raw reasoning ability. It shows up in how reliably a model follows instructions, how consistently it selects the correct tool, and how well it produces structured outputs when downstream systems expect them. Long context support can be useful, but quality and stability matter more than headline token counts.

LLM security

Security and data handling are foundational. Enterprises need clear answers about where data is stored, how long it is retained, whether prompts or outputs are used for training, and how those settings can be controlled at the organization level.

LLM governance

Governance and operational readiness often become the deciding factors. That includes admin controls, auditability, observability, version pinning, and deprecation timelines. Agent systems tend to live longer than pilots, so surprises in these areas can be costly.

LLM integration

Integration and actionability are what separate agents from passive applications. Tool calling, permission models, and the ability to safely execute actions across systems all influence whether an LLM can move beyond suggestion into execution.

Finally, there are commercial realities. Rate limits, quotas, and cost predictability matter more than list prices once agents begin handling real workloads.

With that framing in mind, the differences between ChatGPT, Claude, and Gemini become clearer.

ChatGPT (OpenAI) in enterprise agent environments

OpenAI’s models are often the default starting point for enterprise teams. The ecosystem is mature, the APIs are widely supported, and many tools and frameworks are built with OpenAI compatibility in mind.

For agent workloads, ChatGPT models tend to perform well at instruction following, structured output generation, and tool invocation. The function calling interface is well understood, which makes it easier to design agents that interact with APIs in a predictable way.

From an enterprise standpoint, OpenAI offers business and enterprise tiers with configurable data retention and opt-out controls for training. Admin tooling and policy controls have improved over time, though enterprises still need to pay close attention to rate limits and usage patterns when agents operate at scale.

Where OpenAI often fits best is in environments that value broad ecosystem support and fast iteration. Many organizations start here, then layer additional controls and orchestration as usage grows.

Claude (Anthropic) in enterprise agent environments

Claude tends to appeal to teams that want tighter data controls and fewer surprises in production. Anthropic has been explicit about training opt-in policies and enterprise data usage, which resonates with organizations operating under stricter compliance expectations.

For powering agents, Claude models are often used for tasks that involve careful summarization, policy interpretation, or long-form reasoning. Tool use is supported, though teams sometimes spend more time tuning prompts and schemas to achieve consistent behavior.

Claude tends to show up in enterprises where risk tolerance is lower and predictability is valued over experimentation. It is less about flashy capabilities and more about dependable behavior within defined boundaries.

Gemini (Google) in enterprise agent environments

Gemini’s strength is its proximity to the Google ecosystem. For enterprises already standardized on Google Cloud or Google Workspace, Gemini can feel like a natural extension rather than an external dependency.

From an agent perspective, Gemini models can be effective when agents need to operate close to existing data and services within Google’s environment. Admin controls, identity integration, and data residency options benefit from tight alignment with Google’s broader cloud governance model.

Gemini is often considered when integration simplicity and existing cloud alignment matter more than maximizing cross-platform flexibility. In heterogeneous environments, teams may need additional orchestration layers to balance Gemini with other models.

TL;DR: How these models compare at a glance

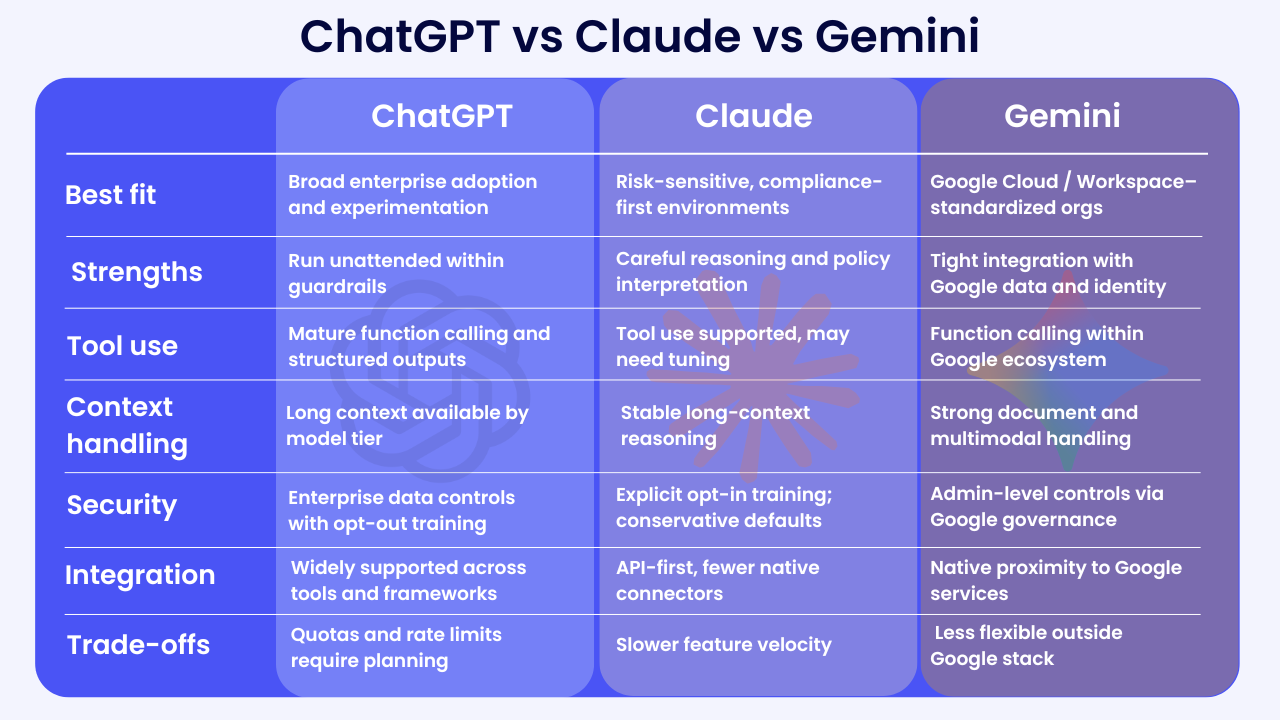

At a high level, most enterprises end up seeing the trade-offs this way:

- OpenAI offers broad ecosystem support and strong agent tooling, with careful attention required around scale and quotas.

- Anthropic emphasizes data controls and conservative behavior, appealing to risk-sensitive deployments.

- Google focuses on tight integration with its cloud and productivity stack, which can simplify governance for Google-centric organizations.

Very few organizations standardize on just one model forever. As agent use cases expand, different workloads tend to pull teams toward different choices.

For teams evaluating ChatGPT vs Claude vs Gemini, the differences become clearer when looked at through enterprise constraints. A ChatGPT vs Claude comparison often comes down to ecosystem breadth versus conservative data handling. A Claude vs Gemini comparison typically reflects governance posture versus cloud-native integration. These tradeoffs matter more in agent workloads than in standalone chat use cases.

Matching models to common enterprise agent scenarios

In practice, model choice often varies by use case.

For IT service management or internal support agents, structured outputs and reliable tool calling usually matter more than creative language. ChatGPT or Gemini often show up here, depending on the surrounding systems.

For HR or compliance-sensitive workflows, where interpretation and policy adherence are critical, Claude is frequently evaluated due to its conservative defaults.

For back-office document processing or analytics narration, cost predictability and batching behavior can outweigh marginal quality differences, leading teams to mix models depending on workload volume.

These patterns reinforce an important point: model choice is rarely static once agents move into production.

As enterprises adopt LLMs for workloads, teams must also think about architectural patterns, from simple API workflows to fully orchestrated multi-agent systems. For a practical look at common approaches to building and scaling LLM-powered agents, see our overview of five architectural strategies for agents.

Why orchestration matters more than the model itself

As soon as an enterprise runs more than one model, complexity multiplies. Different retention policies, rate limits, tool interfaces, and failure modes create operational friction.

This is where orchestration becomes essential. Enterprises need a way to route requests to the right model, enforce consistent governance, manage tool access, and maintain an audit trail across model boundaries. Without that layer, teams spend more time reconciling AI behavior than delivering value.

From an enterprise perspective, the strategic question shifts from “Which LLM is best?” to “How do we operate multiple LLMs safely and predictably across the business?”

Making a decision without over-optimizing

A practical way to approach this comparison is to start with constraints rather than preferences. Data sensitivity, compliance requirements, existing cloud investments, and expected workload shape usually narrow the field quickly.

From there, teams can test models against their own data and workflows, measure reliability, and plan for change. Treating LLM selection as an ongoing program rather than a one-time decision reduces risk and keeps options open as the landscape evolves.

From model choice to enterprise readiness

ChatGPT, Claude, and Gemini each bring unique strengths to enterprise AI programs. None of them, on their own, solve the challenges of integration, governance, and scale that define agent deployments.

Enterprises that succeed with AI agents focus less on picking a single “best” model and more on building an architecture where models can be swapped, governed, and orchestrated as needs change.

That perspective is what turns LLMs from promising tools into durable enterprise infrastructure.

In practice, this is why many enterprises invest in an orchestration layer alongside their models. Platforms like Merlin Agent Builder are designed to support this reality, allowing teams to select, swap, and combine LLMs based on specific use case requirements rather than locking into a single provider. By decoupling agents from any one model, organizations can adapt as capabilities, costs, and constraints evolve, without having to rebuild their systems from scratch.